Journal of Defense Management

Open Access

ISSN: 2167-0374

Review Article - (2019) Volume 9, Issue 1

The purpose of this qualitative grounded theory study was to examine the definition of infrastructure readiness in terms of Navy laboratories, and to define the best criteria or approach for effectively managing readiness to meet changing technical mission requirements. Through semi-structured interviews with leadership about their perceived definition of infrastructure readiness and the perceived adequacy of the current model, clarity emerged on the criteria for adequately defining, measuring, and assessing Navy laboratory infrastructure readiness. This will allow further evaluation of the existing system and identification of any gaps or risks. The primary goal of this study was to create an accurate definition of Navy laboratory infrastructure readiness and, subsequently, the key attributes of that readiness. The second goal was to research other readiness systems to assess their readiness elements and measures, and to determine their applicability to Navy laboratories and the feasibility of adapting them for Navy laboratory use. The third and final goal was to research how existing models and systems in other areas could be applied to any gaps identified through the interview process of the qualitative study. The study found that infrastructure readiness is viewed holistically as an environment in which to perform work; that it includes items outside the technical definition of infrastructure, such as space, laboratory equipment, and processes; and that there are significant gaps in the ability of the existing Navy infrastructure readiness systems to manage many of these areas. In addition, two management system approaches emerged: tactical and strategic. Tactical management is focused on real-time management of systems to ensure a readiness state, while strategic management is more of a capacity management system to ensure adequate capacity when needed.

Navy; Readiness; Infrastructure

Readiness is a term used to describe the state of many different things. As Pam Powell discusses in her article “The Messiness of Readiness”, the definition of readiness is difficult to pin down and means different things to different people [1], which makes it difficult to define, and subsequently to measure, readiness across the multitude of areas and capabilities to which it is applied. Readiness can be used to measure college readiness [2], the operational readiness to react to a disease outbreak [3], and even the preparedness of military units [4].

Readiness in the context of college and career readiness has been determined to be a critical measure, and factors such as involvement in high-rigor courses in a secondary curriculum are linked to outcomes in college enrollment, persistence, and graduation. Studies show that participation in college preparatory coursework and gateway courses has a direct correlation with postsecondary outcomes [5]. These secondary preparatory courses also provide an opportunity for students to apply skills associated with time management, stress management, and studying [5]. Secondary preparation courses and gateway courses, and the measures associated with them, have become important measures of students’ college and career readiness, although additional research is needed to look further at various types of educational institutions and career opportunities.

Operational readiness for a disease outbreak is another area in which readiness is measured. A diverse field of disease modeling has emerged over the past 60 years, resulting in multiple, complex models that generate numerous scenarios [3]. The various scenarios can be viewed in the context of operational readiness, or the ability to use the model in an operational setting. Although no specific model exists for operational readiness for a disease outbreak, the terminology implies that there is a methodology to define it. Corley (2014) identifies that operational readiness is “user, and intended use, dependent”, and that a model one user identifies as accurate may not be adequate for another user, especially given their differing missions [3].

Military readiness, too, can be user, and intended use, dependent. Military readiness can be defined as the ability of forces to respond, when and where needed, to effectively carry out their missions, and can apply to multiple areas, including equipment, supplies, people, and skill sets [6]. As described by Finch, the concept of readiness is easily understood; however, upon closer review, the areas are extremely diverse and must be balanced in a holistic and synergistic approach to create the desired results.

One of the areas that contributes significantly to military readiness is equipment [6]. Major Washington identifies equipment as one of the three pillars of fielding: equipment, personnel, and resources, with each area having a myriad of subordinate issues, such as research and development for equipment [7]. To take this a step further, the research and development of that critical equipment requires research and development laboratories that are capable of developing new, advanced, and innovative equipment to outpace the nation’s adversaries. Those laboratories require the people, processes, and laboratory spaces, or infrastructure, to continuously develop that cutting-edge equipment.

Infrastructure is a broad area encompassing the many physical assets that create our environments; Fulmer defined it as “the physical components of interrelated systems providing commodities and services essential to enable, sustain, or enhance societal living conditions” [8]. This infrastructure is critical to daily life and includes items like the interstate system, the electrical grid, and even National Defense, which is a particular focus for this study. Infrastructure readiness is used to measure and discuss critical infrastructure in our society today. Readiness can be expensive to maintain, and is often considered wasteful if the readiness levels are considered excessive. Conversely, it can be considered risky if areas are perceived as not being maintained to high enough levels [9].

This study starts by exploring the existing infrastructure management system utilized by the Navy and understanding its methodology and how its specific criteria are used to measure readiness. Readiness measurement systems for other types of infrastructure were then researched to determine what systems exist today, what methodology and criteria they use, and whether any of those systems or criteria could be used to describe and measure Navy laboratory readiness more accurately. Naval Surface Warfare Center (NSWC) Crane leadership was interviewed to discuss readiness and gather data on their views of readiness systems and the effectiveness of the Navy laboratory readiness system. This effort clarified leadership’s definition of readiness and determined that the current system is not considered adequate and that gaps in the system exist that need to be explored and filled.

Statement of problem

The United States National Defense is quickly losing its technological advantage over its adversaries. With the advent of the internet, adversaries are able to replicate in a matter of months the technology that took decades to develop. This is quickly closing the gap and eroding that advantage. The Navy laboratories are designed to be the innovative engines that provide the technological breakthroughs that maintain the nation’s advantage. Due to the increased speed of its adversaries, the nation needs to become much more innovative and create solutions much more quickly. Innovation relies primarily on people, process, and resources, with infrastructure being a key element that is not currently getting equal consideration because it is part of a larger management system. This is quickly causing constraints in the overall system, resulting in significant roadblocks and delays, and severely hindering innovation across these critical laboratories.

This area warrants further study to determine how to effectively define, measure, and subsequently manage Navy laboratory infrastructure readiness in support of innovation. The system used today to measure Navy laboratory infrastructure readiness is more of an infrastructure management system that looks at component-level items within a facility and determines the condition of each component [10]. While this may be effective at managing the lifecycle of each component within a facility, it needed to be assessed to determine whether it is an effective way of measuring the true infrastructure readiness of a Navy laboratory.

Through research of the existing management system, research of leadership’s assessment of the ability of the existing management system, and comparison to other existing readiness systems, gaps emerged as future areas of focus. Those gaps in critical areas could help define requirements for improving the ability to measure and assess readiness, thereby identifying critical areas that need to be addressed and are currently being overlooked, not only in Navy laboratories but in other critical infrastructure areas as well.

The electric grid is an example of an area where readiness was inaccurately assessed and monitored, with catastrophic results. On August 14, 2003, large portions of the electric grid in the Northeastern United States and Southeastern Canada experienced blackouts for up to two days, resulting in an estimated 4 to 10 billion dollars in cost and 18.9 million lost work hours due to the outage. On the following day, the President of the United States, George W. Bush, and the Canadian Prime Minister, Jean Chretien, directed that a joint U.S.-Canada Power System Outage Task Force be established. Its final report stated that the blackout could have been avoided, and pointed primarily to enforcement of reliability standards and compliance. This scenario is one of many that could be researched and used as an example, and it illustrates the complexity of the infrastructure system and the magnitude of the effects of an ill-defined and poorly managed capability. It is currently neither clear nor proven that the existing infrastructure management system for Navy laboratories is adequate for managing true infrastructure readiness, and it therefore needs to be assessed to determine its adequacy.

Purpose and goals

The purpose of this proposed qualitative grounded theory study was to examine the definition of infrastructure readiness in terms of Navy laboratories, and to define the best criteria or approach for effectively managing said readiness to meet changing technical mission requirements. Through semi-structured interviews with leadership about their perceived definition of infrastructure readiness and the perceived adequacy of the current model, clarity emerged on the criteria for adequately defining, measuring, and assessing Navy laboratory infrastructure readiness. This resulted in further evaluation of the existing system and identification of gaps and risks.

The primary goal of this study was to create an accurate definition of Navy laboratory infrastructure readiness and, subsequently, the key attributes of that readiness. The second goal of the study was to research other areas of infrastructure to assess their readiness elements, measures, and assessment systems, and to determine their applicability to Navy laboratories and the feasibility of adapting them for Navy laboratory use. The third and final goal of the study was to research how existing models and systems in other areas could be applied to any gaps identified through the interview process of the qualitative study.

Research question

How does the Navy infrastructure management system maintain laboratory readiness? Sub-questions that need to be answered are: What is the role of the Navy laboratories, and how does their readiness affect the Navy? What are the areas of readiness for a Navy laboratory, and how does the specific area of infrastructure readiness impact the overall mission?

Definition of terms

Key terms that are relevant to this study are Navy laboratories and infrastructure readiness. Navy laboratories are the Navy sites that collectively make up the Naval Research and Development Establishment (NR&DE). These sites are the innovative engines that provide the technological breakthroughs to the fleet’s toughest problems. These laboratories include the people, processes, equipment, infrastructure, and intellectual capital that work as a system to provide innovative solutions to the warfighters’ toughest challenges and give our warfighters a decisive advantage over our adversaries.

Infrastructure readiness is defined as the state of preparation of infrastructure, or the ability of infrastructure to meet operational requirements. This includes the facility, specific features of the facility to support the operations, supporting attributes such as parking, ranges, and test fixtures, supporting utilities, and other associated supporting attributes. The readiness of infrastructure is the ability to support the capabilities and capacities required by said infrastructure. Powel states that NAVSEA is directed to manage ship material readiness, which may be the direct linkage to how infrastructure readiness requirements need to be established.

The approach for the literature review started with the overarching question, “How does the Navy infrastructure management system maintain laboratory readiness?” It then turned to the question, “What do the laboratories do for the Navy, and how does laboratory readiness relate to the Navy?” Finally, it asked, “What are the areas of readiness for a laboratory, and how does the specific area of infrastructure readiness apply?”

Navy Laboratory Infrastructure Readiness

Readiness defined: Readiness is a state that means different things to different people. Corley said it best when he stated that readiness is “user, and intended use, dependent” [3], and went on to say that it depends on differing missions. This is precisely the concern for Navy laboratories, and the reasoning behind the research within this paper.

Innovation engines: The Navy laboratories are the innovation engines for the Navy, providing innovative technological solutions to the fleet. These laboratories are a collection of sites, known as the NR&DE, and provide solutions to our fleet’s toughest challenges.

The NR&DE consists of the Naval Sea Systems Command (NAVSEA) Warfare Centers, Naval Air Systems Command (NAVAIR) Warfare Centers, Space and Naval Warfare Systems Command (SPAWAR), Marine Corps Systems Command (MCSC), and the Naval Research Laboratory (NRL). The sites comprise the land, buildings, test ranges, equipment, and the people, processes, and knowledge required to provide innovative, technological solutions.

These innovation engines are responsible for ensuring the weapon systems and technology deployed to the fleet remain operational through software and hardware upgrades, as well as providing new hardware and software to combat new threats identified in combat. The NR&DE laboratories are also responsible for designing, developing, testing, and evaluating major upgrades and “life-extensions” of these major systems. Finally, the NR&DE laboratories are responsible for producing game-changing, innovative solutions to combat emerging threats and to continue to give our warfighters a decisive advantage over our adversaries.

The Navy is losing its technological edge, as technology that took decades to develop is replicated in other countries in months. John Kao contends that the United States is losing its innovative advantage, and if we do not wake up, the country is doomed to be a second-rate country, outpaced by the likes of Singapore or China [11]. In contrast, Savitz and Tellis state that even though China, Asia, and other countries are outpacing the United States in areas like the number of engineers and scientists graduating each year, which are indicators of innovation, the United States has not yet lost its innovative edge, primarily due to our culture of acceptance of failure [12]. Despite currently maintaining our innovative foothold, it is apparent that the game is changing, the technological lead has shortened, and if we do not make improvements, we will no longer remain a top country.

Laboratory elements: Navy laboratories rely on resources, processes, and culture to support an innovative environment. Each of these categories is broad, consisting of multiple sub-elements. Resources consist of the sub-elements people, equipment, and infrastructure. Processes consist of processes for doing work, measuring work, ensuring innovation, measuring innovation, rewarding behavior, hiring practices, and retention practices. Culture is also broad, including behaviors desired to promote innovative cultures, reward systems to encourage certain behaviors, and how information is communicated internally and externally.

People: There are ongoing efforts focused on attracting and retaining the best and brightest engineers and scientists to the Navy laboratories through special hiring processes. There are programs established to train the organization in areas of innovation, and training programs to encourage higher-level learning and degrees. There are processes in place to maintain and improve our culture, and structure in place to promote innovation organically within the construct of the organization. There are incentives for patents, and programs to encourage employees to champion innovative projects through special authorities like Section 219 and Navy Innovative Science and Engineering (NISE). However, there are no mechanisms to ensure the resources, such as infrastructure, are supported at the same levels. In addition, as the demand for innovation soars to try to keep pace with our adversaries, the workforce is growing significantly, resulting in severe space constraints across the laboratories. To make matters worse, the model used to manage the infrastructure appears antiquated and inadequately funded. While the areas of people and process are being afforded the ability to go through innovative changes to promote innovative ways of doing things, the infrastructure management systems are not being afforded the same flexibility. Just as Major Washington [7] states that the Army’s current personnel readiness system is outdated because it was designed for an industrial era that has passed, the infrastructure management system for the Navy laboratories also appears outdated, as it was designed for the same industrial era.

Infrastructure: The American Society of Civil Engineers has produced an infrastructure report card since 1988 via a congressionally chartered National Council on Public Works group, resulting in a report titled Fragile Foundations: A Report on America’s Public Works that is updated every four years (American Society of Civil Engineers, 2017). The report is a call to action for the civil engineering community and our legislators to focus on the nation’s failing infrastructure. The report card is divided into eight criteria: capacity, condition, funding, future need, operation and maintenance, public safety, resilience, and innovation. The criteria are used to grade sixteen areas or categories of infrastructure to provide an overall assessment of national infrastructure. The last report, in 2017, graded twelve of the sixteen areas as a D. This report on the state of national assets, combined with concern over the lack of understood and agreed-upon criteria for readiness and first-hand knowledge of the state of Navy laboratories, has led to a focus on the problem of defining readiness for Navy laboratories.

The infrastructure aspects of a Navy laboratory are vast, including not only all of the buildings and equipment, but also the utilities that make them operate effectively. This includes the electricity and fiber optics that, if interrupted even briefly, could interrupt tests that have been ongoing for days, weeks, or even months. The spaces must be adequate in capacity, condition, and configuration so that they can be quickly and easily converted to meet changing mission requirements. The current infrastructure management system and its associated tools and resourcing are inadequate to maintain the Navy laboratory infrastructure in an adequate state of readiness. To illustrate this, a study performed at the request of the Chief of Naval Operations by the National Research Council made a major recommendation that the Navy should change its statement of infrastructure vision to “Essential service at a minimum cost”. Not only is the infrastructure management system built to manage an outdated industrial-age construct, its underlying premise is one of bare necessity, which is not conducive to managing a highly technical laboratory that demands reliability and requires state-of-the-art features to support highly technical requirements and to attract and retain the best and brightest scientists and engineers.

Infrastructure management: The infrastructure management system currently in use is a component-level, condition-based system that utilizes a risk-based decision model. While this approach may be advantageous for large portfolios of buildings with industrial operations in a static setting, it creates challenges for dynamic laboratories creating cutting-edge innovation. Grussing also states that organizations often lack the data, tools, personnel, and experience to adequately analyze the data, recognize trends, predict failures, and balance investment [10].

The Navy laboratories may require a more robust system that is managed more effectively and focused on operational readiness rather than component-level life-cycle management. Multiple management systems exist, including some that assess real-time risk probabilities by monitoring system controls and comparing operating activity to established baselines to determine whether any risks exist within the system [13]. Various systems-engineering-based and risk-based systems exist that could yield better results for managing Navy laboratory infrastructure readiness.
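The baseline comparison described above can be illustrated with a minimal sketch. It is not drawn from the cited system [13] or from any Navy tool; the `Baseline` class, the `flag_risks` function, the signal names, and all numeric values are hypothetical and exist only to show the idea of flagging operating activity that falls outside an established range.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    """Expected operating range for one monitored signal (hypothetical)."""
    low: float
    high: float


def flag_risks(readings: dict[str, float], baselines: dict[str, Baseline]) -> list[str]:
    """Return the names of signals whose reading falls outside its baseline range."""
    flagged = []
    for name, value in readings.items():
        baseline = baselines.get(name)
        if baseline is None:
            continue  # no baseline established for this signal, so nothing to compare
        if not (baseline.low <= value <= baseline.high):
            flagged.append(name)
    return sorted(flagged)


# Illustrative values only; they are assumptions, not data from the study.
baselines = {
    "supply_voltage_v": Baseline(low=114.0, high=126.0),
    "lab_temperature_c": Baseline(low=18.0, high=24.0),
}
readings = {"supply_voltage_v": 109.5, "lab_temperature_c": 21.0}

risks = flag_risks(readings, baselines)
print(risks)  # signals that are currently outside their baseline range
```

A real system would likely add time windows and probabilities of failure rather than a simple range check; the range check is used here only because it is the simplest form of "compare to baseline".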

This study was a good candidate for a qualitative study as it allowed leadership within the organization with historical knowledge an opportunity to provide their perspectives over the course of previous infrastructure management systems and the current system, which may not be able to be accomplished through quantitative studies. The study was best suited for and utilized a grounded theory approach, as it focuses on a management process.

The participants in this study were limited to Naval Surface Warfare Center (NSWC) Crane leadership with long histories in the organization. Although this group was assumed to represent the larger community of leaders across the NR&DE, this assumption has limitations. Since the participants are from one geographical location and the same organization, their views may differ somewhat from those of the entire community. The participants’ interviews took place face to face and were digitally recorded to ensure all information was collected accurately. The data was transcribed and then analyzed by coding it and performing further analysis on the coded data. Triangulation of data was utilized to ensure validity of results [14]. All research was completed in compliance with Institutional Review Board (IRB) requirements.

Participants

The participants for this study were limited to the leadership at NSWC Crane. The study was coordinated through the organization’s leadership, with a request that the opportunity to participate be extended to the leadership team. Four participants were selected, and there were no limitations on age, sex, or ethnicity. The participants were asked to contact the researcher directly and anonymously, and once a list of willing participants was obtained, primary criterion sampling was utilized to narrow the list to those with over 15 years of experience. Primary criterion sampling is the use of pre-specified criteria to down-select from the total number of participants interested in the study, to ensure that the participants meet certain criteria, in this case a minimum of 15 years of experience, which ensures knowledge of multiple infrastructure management systems. This approach was utilized because it was likely to yield richer, more robust interviews, as the participants have historical knowledge of and perspective on multiple systems over a longer period of time.
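As a minimal sketch of the down-selection step, criterion sampling can be expressed as a filter over the list of willing volunteers. The `Volunteer` class, the `criterion_sample` function, and the volunteer records below are hypothetical. The text describes the criterion both as "over 15 years" and as "a minimum of 15 years"; the sketch assumes the inclusive reading (15 years or more).

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_YEARS_EXPERIENCE = 15  # threshold from the study; inclusive reading is an assumption


@dataclass(frozen=True)
class Volunteer:
    """A willing participant before down-selection (hypothetical record)."""
    label: str
    years_of_experience: int


def criterion_sample(
    volunteers: list[Volunteer], min_years: int = MIN_YEARS_EXPERIENCE
) -> list[Volunteer]:
    """Keep only the volunteers who meet the pre-specified experience criterion."""
    return [v for v in volunteers if v.years_of_experience >= min_years]


# Made-up volunteers for illustration; not the study's participants.
volunteers = [
    Volunteer("V1", 22),
    Volunteer("V2", 9),
    Volunteer("V3", 15),
    Volunteer("V4", 31),
    Volunteer("V5", 12),
]

selected = criterion_sample(volunteers)
print([v.label for v in selected])
```

Keeping the threshold as a named constant makes the criterion explicit and easy to audit, which matters because the criterion is what justifies the sample.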

Data collection

During the initial face-to-face interview, and prior to the commencement of the interview, the researcher covered all aspects of the interview. The researcher described to the participant the purpose of the research, that the interview would be audio recorded, that the interview would take approximately one hour, and that there were no direct costs or benefits to the participant. The researcher also explained that if further information were required, the participant would be contacted for another interview that would require up to another hour. The researcher explained that the conversation would be audio recorded using a handheld digital audio recorder. The data was collected via digital recording, which ensured that all of the participants’ words were captured, more accurately portraying their thoughts and memories. The participants’ identities were kept anonymous by assigning each participant a pseudonym in the manner of P1, P2, P3, and P4 to limit the risk of confidentiality breaches. The digital recordings were then transcribed by the researcher, using NVivo software and multiple reviews of the audio recordings, rather than by a research assistant, in an effort to further reduce the risk of compromise. The recordings and transcriptions were then stored in an encrypted file on a password-protected personal computer. The data will be destroyed three years after the completion of the research. After explaining the process to the participants, the researcher asked whether there were any questions and answered any outstanding questions the participants had. Once the questions were answered, the researcher asked the participants to sign a copy of the consent form.

Interviews were conducted face to face in a setting requested by the participants, to ensure the participants were comfortable in their environment and willing to participate for the requested one-hour duration of the interview. Upon receipt of the signed consent forms from the participants, the interviews were scheduled.

Data analysis

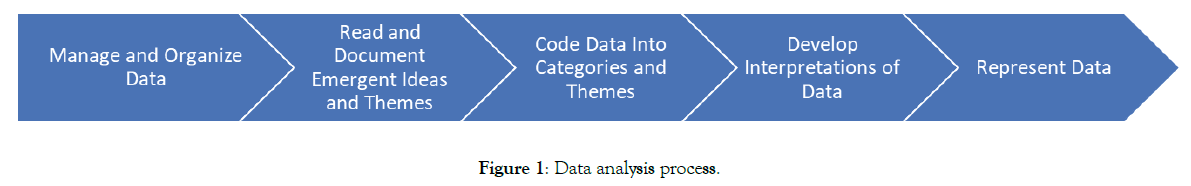

The data analysis was performed on all transcribed interviews by following the approach outlined by Creswell and Poth [15]. The approach consisted of five primary steps: manage and organize the data, read and document emergent ideas or themes, code the data into categories and themes, develop interpretations of the data, and represent the data (Figure 1).

Figure 1: Data analysis process.

The data was organized in files and a file naming convention established to ensure proper organization and easy retrieval. The digital recordings were transcribed into words. The documents were stored on the computer and in hard copy files as required for review and analysis. These files will be retained for three years from the completion of the study, and then will be destroyed. The data was reviewed by reading multiple times, then analyzed, and notes and ideas written in the margins of the paper to document additional thoughts. Emergent themes were documented in the margins for future reference and to start to build the analytical framework. The notes were then summarized to present a shortened version of the transcribed data.

The data was coded and categorized to further understand and visualize themes from the interviews. Common codes and categories were utilized to build the analytical framework that was used to present an overall analysis of the interviews.
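The coding and categorizing step can be sketched as a simple tally, assuming each coded transcript segment is stored as a (participant, code) pair and each code maps to one category. The codes, categories, and pairings below are hypothetical placeholders, not the study's actual codes or findings; only the participant labels P1 to P4 follow the pseudonym scheme described earlier.

```python
from collections import Counter, defaultdict

# Hypothetical coded segments: (participant pseudonym, code applied to a passage).
coded_segments = [
    ("P1", "space constraints"),
    ("P2", "space constraints"),
    ("P1", "equipment uptime"),
    ("P3", "workload projection"),
    ("P4", "space constraints"),
    ("P3", "equipment uptime"),
]

# Hypothetical mapping from each code to a broader category.
code_to_category = {
    "space constraints": "capacity",
    "workload projection": "capacity",
    "equipment uptime": "condition",
}


def tally(segments, mapping):
    """Count how often each code occurs and which participants raised each category."""
    code_counts = Counter(code for _, code in segments)
    participants_by_category = defaultdict(set)
    for participant, code in segments:
        participants_by_category[mapping[code]].add(participant)
    # Sort participant labels so the result is stable and easy to read.
    by_category = {cat: sorted(people) for cat, people in participants_by_category.items()}
    return code_counts, by_category


code_counts, by_category = tally(coded_segments, code_to_category)
print(code_counts.most_common())
print(by_category)
```

Tracking which participants raised each category, and not only how often a code appears, helps distinguish a theme shared across interviews from one emphasized repeatedly by a single participant.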

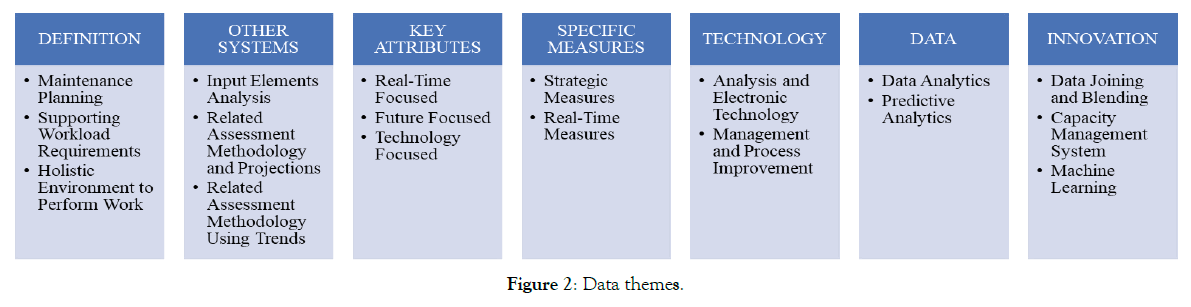

The coded data and categorized data were assembled into charts to display the data to better visualize themes and families of data. This visualization enabled theories to emerge. The data presents a point of view and displays the data in a manner that illustrates theories. This data, analysis, and resulting summaries, models, and theories answer the research question posed, as they are all outputs of the interviews that provide a holistic view from all of the interviews performed (Figure 2).

Figure 2: Data themes.

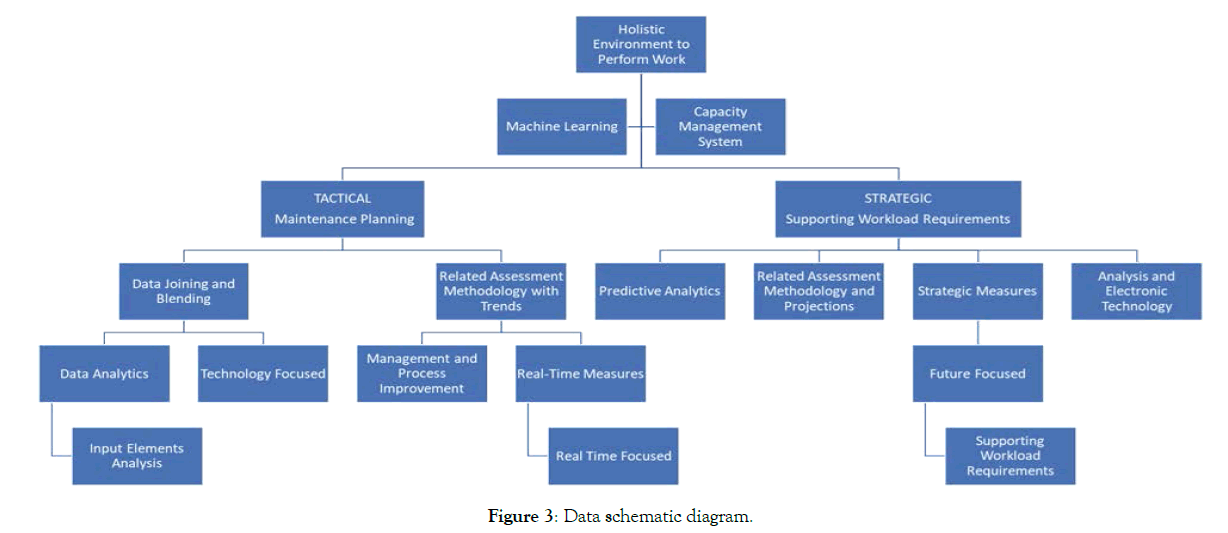

The question set results were then further combined into arrays and a schematic diagram that acts as a heuristic device to help build an accumulating approach to holistic results. Interestingly, the question order generally resulted in an increasing order that had two primary branches, or pillars, supporting the overall approach. The two branches could be classified as tactical and strategic efforts. The tactical approach uses measures like maintenance planning and various data streams on real-time elements, like current costs and uptime, that either receive independent data analysis or are blended and joined for further analysis to yield results. The strategic, future-focused approach uses workload projections and various related data sources that are blended and joined, resulting in a predictive analytics approach to project future requirements and planning.

These two efforts end in two separate categories of results that may be further linked to yield higher-level results. Examples include capacity analysis that links real-time information to strategic requirements, the use of techniques like machine learning, and higher-level analysis that links other data sets and provides feedback to the real-time systems.
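To make the distinction between the two branches concrete, the following minimal sketch pairs a tactical measure (current uptime) with a strategic one (a projected capacity gap). The function names, the workload units, and every number are hypothetical. The straight-line trend is a simple stand-in for the predictive analytics described above, not a method reported by the study.

```python
def uptime_fraction(hours_available: float, hours_scheduled: float) -> float:
    """Tactical view: share of scheduled hours the asset was actually available."""
    if hours_scheduled <= 0:
        raise ValueError("hours_scheduled must be positive")
    return hours_available / hours_scheduled


def linear_projection(history: list[float], periods_ahead: int) -> float:
    """Strategic view: extend the average period-over-period change into the future."""
    if len(history) < 2:
        raise ValueError("need at least two historical points")
    step = (history[-1] - history[0]) / (len(history) - 1)
    return history[-1] + step * periods_ahead


def capacity_gap(projected_demand: float, current_capacity: float) -> float:
    """Shortfall between projected demand and current capacity (zero if none)."""
    return max(0.0, projected_demand - current_capacity)


# Illustrative numbers only; they are assumptions, not study data.
uptime = uptime_fraction(hours_available=950.0, hours_scheduled=1000.0)
demand = linear_projection(history=[100.0, 110.0, 120.0], periods_ahead=2)
gap = capacity_gap(projected_demand=demand, current_capacity=125.0)

print(f"uptime={uptime:.2f} projected_demand={demand:.1f} capacity_gap={gap:.1f}")
```

Linking the two, for example by discounting current capacity by the observed uptime before computing the gap, is one way the real-time and strategic results could feed each other.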

Ethical considerations

The research was conducted in accordance with IRB standards, with permission from Sullivan University’s IRB, and with approval from NSWC Crane’s leadership. Interviews only commenced after the participant had a full understanding of the research, as described in the data collection section. In addition, the participant was informed that they were free to discontinue the interview at any time. The participant was asked to sign the consent form, and once it was signed, the interview was scheduled and commenced. Finally, when the audio recording commenced, the participant was asked to confirm that they understood the process, had signed the consent form, and agreed to continue with the interview, so that those aspects were documented on the recording.

Reducing coding problems

Triangulation was utilized to compare data from various sources to validate the data. This methodology ensured consistency of the data from multiple sources, allowing further review of the outliers as necessary. Member checking was utilized to ensure the findings were in alignment with the data gathered from the participants. This was accomplished by asking the participants to review the findings and final documents to ensure their interviews were recorded accurately and in alignment with the conclusions. Peer review was the final step to ensure that the conclusions were understandable and defensible. This was accomplished by asking one of the researcher’s peers and the lead researcher to review the final work and provide feedback on content and format.

Timeline

The study took place between March 25, 2019 and June 10, 2019. The study began with coordination through NSWC Crane leadership and an invitation to the extended leadership to participate in the study. Once the study was communicated to participants and the proper consents obtained, the interviews began within two weeks. Upon completion of the interviews, the data analysis took place over the next four weeks. Once the data was obtained and analyzed, the final report was written during the last three weeks.

Limitations of the study

This study focused solely on defining readiness for Navy laboratories and was not intended to extend to other government entities or the private sector. It was also limited to a review of comparable readiness systems that could be applied to Navy laboratories, along with their attributes and measures, and did not cover all systems and methodologies that may exist. The study was further limited to the technologies and data management efforts related to those comparable readiness systems, and not all technologies and data management efforts available on the market today. Additionally, as a pilot study, only four interviews were conducted.

Areas of future research

This study focused on infrastructure readiness systems for Navy laboratories; future studies could extend to other defense or government laboratories, and even to private industry. The related area of equipment readiness should also be reviewed for potential benefit. Various other aspects of Navy laboratories could be considered, such as innovative processes and systems, human resources, cultures, and supply chains. The availability of toolsets to fill any identified gaps should also be considered for future research.

The purpose of this qualitative grounded theory study was to examine the definition of infrastructure readiness in terms of Navy laboratories, and to define the best criteria or approach for effectively managing that readiness to meet changing technical mission requirements. This was accomplished via face-to-face interviews with participants who met the primary criterion sampling requirement of over 15 years of experience at NSWC Crane. The interviews were transcribed, and the words were coded and categorized to perform analysis on the data. The themes from the data were used to develop models and conclusions that answer the research question and pose theories about it. Results indicate that infrastructure management is much broader and more dynamic than the current systems are capable of managing, and that those systems should be broadened and improved to cover existing gaps.

A total of four interviews were completed with four individual participants. Each participant was asked the series of questions, and the interviews were audio recorded for accuracy. The audio recordings were transcribed, and the data were coded into categories and analyzed.

The data revealed that infrastructure management is viewed holistically as an environment to perform work. Participants viewed infrastructure readiness as the ability of a space to support work, even if some of the resourcing is technically outside of the definition of infrastructure. This definition included space, cubicles, lab equipment, general equipment, resources, people, unique buildings, and processes. One participant stated:

“When I think of infrastructure readiness it's that the customers of the infrastructure providers have access to processes and have the resources to be able to get the type of environment that they need to be able to be productive to deliver those products and to deliver those services. And it might be cubicles, a desk, lab equipment, or access to power utilities. It's that they are able to have a well-resourced environment appropriate for the type of work that they're doing.”

Another participant stated:

“I think about that (infrastructure readiness) in the very broad sense is about making sure that we have laboratory and infrastructure space ready for us to do the technical work that we need to do. That includes what individuals need when they're in those spaces to operate appropriately. I really don't think about it, even though it's a part of it, the equipment piece by definition. That's all a part of it having it all ready for operational availability.”

These definitions and expectations are not in alignment with the approach used by existing Navy laboratory infrastructure management systems. The current systems focus on the condition and configuration of facility component-level items and only scratch the surface of creating an environment that is ready to perform work and operationally available. They do not account for, or attempt to manage, the many items identified during the research, such as general equipment, cubicles, laboratory equipment, people, or processes.

The data also revealed that, in addition to infrastructure readiness being a broad area not covered by the existing infrastructure management systems, two distinct categories of management emerged. The first was focused tactically on real-time management of systems, and required collecting data from multiple sources and joining and blending it to perform trending and data analysis. The second was a strategic approach that used future-focused workload requirements to develop strategic targets in a methodical approach to capacity management.

Tactical management focused on the maintenance of existing facilities, including scheduled maintenance, and on the operational availability of space, which is often measured by the ability of dynamic equipment to meet specific requirements. These could include set points for temperature and humidity for HVAC equipment, or other environmental conditions for specific laboratory equipment. Such measures of operational availability and environmental conditions are not used in the current Navy infrastructure readiness systems, which creates a gap in the true measurement of infrastructure readiness. The underlying idea is to measure uptime, utilities loading, mean time between failures, and the risk of system failures that impact readiness. This gap was identified through interviews with participants, one of whom stated:

“My background is the heaviest in air work load and there are definitely meantime between failure systems that are ingrained in platforms that are out there in the Navy that take a platform and then dive into the systems that are on that platform. Then they track those systems that are on the platform and a tremendous amount of data on, what kind of failures are we seeing and what's the frequency that all boils up to how the readiness of whatever platform it is that you're looking at.”
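As an illustration only, the tactical measures named above (uptime, mean time between failures, and compliance with environmental set points) could be computed from an outage log and sensor readings roughly as follows. This is a minimal sketch under stated assumptions: the `Outage` record, the function names, and the idea of a fixed observation window are hypothetical and are not drawn from any existing Navy system.

```python
from dataclasses import dataclass


@dataclass
class Outage:
    """One unplanned failure of a facility system (hypothetical record)."""
    start_hour: float  # hours since the start of the observation window
    end_hour: float    # hour at which service was restored


def availability_metrics(window_hours: float, outages: list[Outage]) -> dict[str, float]:
    """Return uptime, MTBF, MTTR, and operational availability for one system."""
    downtime = sum(o.end_hour - o.start_hour for o in outages)
    uptime = window_hours - downtime
    failures = len(outages)
    # With no failures MTBF is undefined; report total uptime as a lower bound.
    mtbf = uptime / failures if failures else uptime
    mttr = downtime / failures if failures else 0.0
    return {
        "uptime_hours": uptime,
        "mtbf_hours": mtbf,
        "mttr_hours": mttr,
        "availability": uptime / window_hours,
    }


def fraction_within_setpoint(readings: list[float], target: float, tolerance: float) -> float:
    """Share of readings that fall within target +/- tolerance."""
    if not readings:
        return 0.0
    in_band = sum(1 for r in readings if abs(r - target) <= tolerance)
    return in_band / len(readings)
```

For example, a 720-hour month with two outages of 4 and 8 hours gives 708 hours of uptime and an MTBF of 354 hours. Real systems would likely also need to distinguish planned from unplanned downtime, which this sketch does not attempt.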

The strategic, future-focused approach uses workload projections and various related data sources that are blended and joined, resulting in a predictive analytics approach to projecting future requirements and planning. This was a key observation: the current Navy infrastructure management system has a methodology for comparing requirements to existing assets, but there is no consistent methodology or approach for generating projected requirements for proper planning (Figure 3).

Figure 3: Data schematic diagram.
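To make the strategic, capacity-focused idea more concrete, the sketch below projects workload forward and compares it with existing capacity. It is a hedged illustration only: the study does not specify a predictive model, so an ordinary least-squares linear trend is used here as a stand-in, and the function names, units, and sample figures are all hypothetical.

```python
def fit_linear_trend(years: list[int], workload: list[float]) -> tuple[float, float]:
    """Fit workload = slope * year + intercept by ordinary least squares."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(workload) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, workload))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def project_capacity_gap(
    years: list[int],
    workload: list[float],
    capacity: float,
    horizon: int,
) -> dict[int, float]:
    """Project workload for `horizon` future years and return demand minus capacity.

    A positive value means projected demand exceeds existing capacity.
    """
    slope, intercept = fit_linear_trend(years, workload)
    last_year = years[-1]
    return {
        year: (slope * year + intercept) - capacity
        for year in range(last_year + 1, last_year + horizon + 1)
    }


# Hypothetical workload history (arbitrary units) against a fixed capacity of 155.
gaps = project_capacity_gap(
    years=[2015, 2016, 2017, 2018, 2019],
    workload=[100.0, 110.0, 120.0, 130.0, 140.0],
    capacity=155.0,
    horizon=3,
)
```

With these made-up figures, the projected shortfall first appears in 2021. In practice the workload series would come from blended data sources, and a more suitable forecasting method could replace the linear trend without changing the comparison step.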

From a practitioner perspective, this study recommends that the Navy consider infrastructure management more broadly, as a holistic environment to perform work rather than a building component condition management system, and develop two new, distinct methodologies for tactical and strategic management. For tactical applications, the recommendation is to develop systems capable of providing real-time data and combining it with data from multiple other systems through data joining and blending, so that it can be used for trend analysis and maintenance planning. This data-rich environment would then provide a platform for transitioning to higher-level analysis and machine learning over time. For strategic efforts, the recommendation is to develop a methodology that collects workload requirements and uses predictive analytics and projections to develop strategic measures and future workload requirements. This would, in effect, be a capacity analysis system focused on meeting future workload growth and demands. From a scholar perspective, the recommendation is to treat readiness management as a systematic process that derives holistic requirements from the operational requirements of the system as a whole, rather than from the sum of its individual physical components.

Citation: Summers T (2019) Considerations on United States Navy Infrastructure Readiness. J Def Manag. 9:181. doi: 10.35248/2167-0374.19.181

Received: 06-Jun-2019 Accepted: 21-Jun-2019 Published: 28-Jun-2019 , DOI: 10.35248/2167-0374.19.9.181

Copyright: © 2019 Summers T. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.