Journal of Clinical & Experimental Dermatology Research

Open Access

ISSN: 2155-9554

Review - (2023) Volume 14, Issue 5

Melanoma is the most serious type of skin cancer. According to the American Cancer Society, an estimated 99,780 new cases of invasive melanoma were expected to be diagnosed in the U.S. in 2022, and melanoma was projected to cause about 7,650 deaths. Melanoma can develop anywhere on the body and often appears as a mole that changes in size, shape or color; color variation and an irregular border are warning signs. Early diagnosis increases the chance of cure before the cancer spreads. Recent developments in Artificial Intelligence (AI) have wide applications in healthcare. Machine Learning (ML), a subfield of AI, has advanced significantly in computer vision: statistical models and algorithms can be built that continuously learn from data to improve their performance on a desired task. However, these models can make errors, and the error rate depends on many factors, e.g. the number of samples, the quality of the pictures, the computing environment, and the choice of machine learning method. Whereas the deep learning approach builds its knowledge from sample pictures, the traditional rule-based approach has business logic built in; its rules could be based on test results, skin color, etc. This paper presents a comprehensive hybrid approach that combines machine learning with color pigment analysis to improve accuracy, and discusses how it can be implemented in melanoma diagnosis. We review the latest research and key findings in computer vision-based machine learning, its limitations, and color pigment-based melanoma detection.

Melanoma, Skin cancer, Diagnostic accuracy, Hybrid approach, Artificial Intelligence

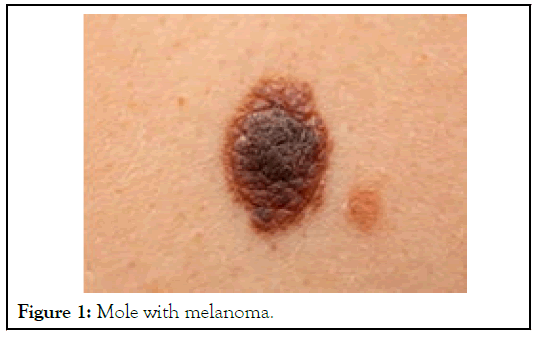

Skin cancer is abnormal cell growth in the epidermis, the outermost skin layer, caused by unrepaired DNA damage that triggers mutations. These mutations lead skin cells to multiply rapidly and form malignant tumors. There are three major types of skin cancer: Basal Cell Carcinoma (BCC), Squamous Cell Carcinoma (SCC), and melanoma. The two main causes of skin cancer are the sun’s harmful Ultraviolet (UV) rays and the use of UV tanning beds. Melanoma is the most dangerous of the three most common forms of skin cancer. Melanoma develops from melanocytes, the skin cells that produce melanin, the pigment that gives skin its color. Melanoma can develop anywhere on the body, either in otherwise normal skin or in an existing mole that becomes cancerous. A sample picture is shown in Figure 1. In men, melanoma most often appears on the face or the trunk; in women, it most often develops on the lower legs. In both men and women, melanoma can occur on skin that hasn't been exposed to the sun. Melanoma can affect people of any skin tone. In people with darker skin tones, melanoma tends to occur on the palms or soles, or under the fingernails or toenails. Signs of melanoma include: (1) a large brownish spot with darker speckles; (2) a mole that changes in color, size or feel, or that bleeds; (3) a small lesion with an irregular border and portions that appear red, pink, white, blue or blue-black; and (4) a painful lesion that itches or burns.

Figure 1: Mole with melanoma.

In 2022, an estimated 197,700 new cases of melanoma were expected to occur in the U.S. Of those, 97,920 were in situ (non-invasive), confined to the epidermis (the top layer of skin), and 99,780 were invasive, penetrating the epidermis into the skin’s second layer (the dermis). In 2022, melanoma was projected to cause about 7,650 deaths. Melanoma is often curable when caught and treated early.
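As a quick consistency check on these estimates, the in situ and invasive counts can be summed and expressed as shares of the total. This is plain arithmetic on the figures quoted in the text; no other data are assumed.

```python
# U.S. melanoma estimates for 2022 quoted in the text (American Cancer Society).
in_situ = 97_920   # non-invasive cases, confined to the epidermis
invasive = 99_780  # cases penetrating into the dermis

total = in_situ + invasive
share_in_situ = in_situ / total
share_invasive = invasive / total

print(f"total estimated new cases: {total:,}")
print(f"in situ: {share_in_situ:.1%}, invasive: {share_invasive:.1%}")
```

The two categories add up to the quoted 197,700 cases and split almost evenly (about 49.5% in situ versus 50.5% invasive).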

Machine learning in melanoma diagnostics

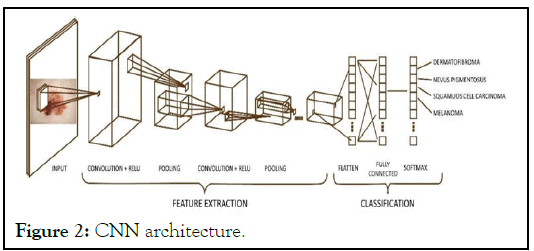

According to Das, et al. [1], AI can aid in the early detection of melanoma, lowering the burden of morbidity and mortality associated with the disease [2]. In AI-based systems, an ML model is developed through a supervised, semi-supervised, or unsupervised process in which computer systems learn from experience without being explicitly programmed. In the supervised approach, the ML model is trained on datasets of problems paired with answers; through trial and error, the model learns to associate each input with the correct response. In the unsupervised approach, the AI system analyzes the ingested data without any predetermined solution. Semi-supervised learning uses both labeled and unlabeled data (Du-Harpur, et al. [3]). Deep learning is a category of ML that processes data through multiple layers, called deep neural networks, each of which can recognize and learn distinct features particular to the dataset [4]. A Convolutional Neural Network (CNN) is a deep, feed-forward network model most typically used to analyze visual images. It has three kinds of layers, namely input, hidden, and output: data enters via the input layer, passes through the hidden layers, and exits via the output nodes. Depending on the complexity of the task, the hidden part may contain multiple computational layers [5,6], including convolutional and pooling layers that allow the network to encode image characteristics [7,8]. The CNN architecture is a development of the Multilayer Perceptron (MLP) designed to process two-dimensional data. As in neural networks in general, each neuron in a CNN has weights, a bias, and an activation function. The CNN architecture shown in Figure 2 consists of convolution layers with Rectified Linear Unit (ReLU) activation and pooling layers, which together form the feature extraction stage, followed by a fully connected layer with softmax activation as the classification layer.

Figure 2: CNN architecture.
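The supervised approach and the basic neuron described above (weights, a bias, and an activation function) can be illustrated in a few lines of Python. This is a minimal sketch, assuming a single neuron with a sigmoid activation trained by gradient descent on the log-loss (that is, logistic regression), not a CNN; the two-feature dataset is synthetic and hypothetical, not clinical data.

```python
import math

# Hypothetical, synthetic labeled data: two numeric features and a 0/1 label.
# Illustrative only; these are not clinical measurements.
examples = [
    ((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.3), 0),
    ((0.7, 0.8), 1), ((0.8, 0.6), 1), ((0.9, 0.9), 1),
]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, weights, bias):
    """A single neuron: weighted sum plus bias, passed through an activation."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(data, epochs=2000, lr=0.5):
    """Supervised learning: repeatedly nudge the parameters toward the labels."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            error = neuron(x, weights, bias) - y  # prediction minus correct answer
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

weights, bias = train(examples)
predictions = [round(neuron(x, weights, bias)) for x, _ in examples]
print(predictions)
```

The "trial and error" in the text corresponds here to repeated small corrections proportional to the prediction error; a CNN is trained on the same principle, but with many more parameters.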

The convolution layer is the first layer; it processes the input image. The image is convolved with a filter to extract features, producing what is called a feature map. ReLU (Rectified Linear Unit) is an activation layer used in CNNs to speed up the training of neural networks: it sets every value in the feature map that is less than zero to zero [9]. Pooling layers are inserted regularly after several convolution layers and progressively reduce the size of the feature map [10]. The fully connected layer is the last layer and connects all the neurons of the previous activation layer; at this stage, the feature maps must first be transformed into one-dimensional data (the flatten process) [11]. AI has the potential to exceed human performance, owing to its virtually unlimited processing power and storage capacity [12]. By September 2018, the US Food and Drug Administration (FDA) had authorized AI approaches for clinical use, including devices to detect skin cancer from clinical photos obtained via a smartphone app [13].
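The layer sequence just described (convolution, ReLU, pooling, flattening, a fully connected layer, and softmax) can be traced with a minimal pure-Python forward pass. This is a sketch under stated assumptions: one hand-picked 3×3 vertical-edge filter, non-overlapping 2×2 max pooling, and a two-class output with made-up dense weights. No training is performed; a real melanoma classifier would use a deep learning framework, many learned filters, and color images.

```python
import math

Matrix = list[list[float]]

def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D convolution, implemented as cross-correlation (as in most
    deep learning libraries): slide the filter over the image to get a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + di][j + dj] * kernel[di][dj]
             for di in range(kh) for dj in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def relu(fmap: Matrix) -> Matrix:
    """Set every negative value in the feature map to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling: shrink the map by `size` along each axis."""
    return [
        [max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]

def flatten(fmap: Matrix) -> list[float]:
    """Turn the 2-D map into one-dimensional input for the dense layer."""
    return [v for row in fmap for v in row]

def dense(x, weights, biases):
    """Fully connected layer: every output neuron sees every input value."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy input: a 6x6 grayscale image, dark on the left, bright on the right.
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
kernel = [[-1.0, 0.0, 1.0] for _ in range(3)]  # hypothetical vertical-edge filter

feature_map = relu(convolve2d(image, kernel))  # 4x4
pooled = max_pool(feature_map, 2)              # 2x2
features = flatten(pooled)                     # 4 values
logits = dense(features, weights=[[0.1] * 4, [-0.1] * 4], biases=[0.0, 0.0])
probs = softmax(logits)
print(pooled, [round(p, 3) for p in probs])
```

The filter responds only along the vertical boundary, so the pooled map is uniform and the softmax output (two probabilities summing to one) favors the first, arbitrarily named, class.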

Limitations in machine learning based diagnostics

Babic, et al. [14] observed that the big difference between machine learning and the digital technologies that preceded it is that machine learning can independently make complex decisions and continuously adapt as it analyzes new data, whereas traditional digital technologies are mostly used for recording, reporting and data analysis. However, machine learning models don’t always work, and they don’t always make ethical or accurate choices. According to the Harvard Business Review article “When Machine Learning Goes Off the Rails,” there are three fundamental reasons for this. The first is simply that the algorithms typically rely on the probability that someone has a disease, so some errors are unavoidable. The likelihood of errors depends on many factors, including the amount and quality of the data used to train the algorithms, the specific type of machine-learning method chosen, and whether the system uses only explainable algorithms (meaning humans can describe how they arrived at their decisions), a restriction that may prevent it from maximizing accuracy.
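Because such algorithms output a probability, the errors a system makes also depend on the threshold at which that probability is turned into a decision. The sketch below computes sensitivity and specificity at a few thresholds; the model outputs and biopsy labels are hypothetical, and the thresholds are illustrative, not recommended operating points.

```python
def confusion_at(threshold, probs, labels):
    """Count true/false positives and negatives when predicting 1 if p >= threshold."""
    tp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 1)
    fn = sum(1 for p, y in zip(probs, labels) if p < threshold and y == 1)
    tn = sum(1 for p, y in zip(probs, labels) if p < threshold and y == 0)
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    return tp, fn, tn, fp

def sensitivity_specificity(threshold, probs, labels):
    tp, fn, tn, fp = confusion_at(threshold, probs, labels)
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical model outputs (probability of melanoma) and biopsy labels.
probs = [0.95, 0.80, 0.55, 0.40, 0.60, 0.30, 0.20, 0.05]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

for t in (0.3, 0.5, 0.7):
    sens, spec = sensitivity_specificity(t, probs, labels)
    print(f"threshold {t:.1f}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

Lowering the threshold catches more melanomas (higher sensitivity) at the cost of more false alarms (lower specificity), and vice versa; no threshold removes both kinds of error when the predicted probabilities of the two classes overlap.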

The second is that the environment in which machine learning operates may itself evolve or differ from the one the algorithms were developed for. While this can happen in many ways, two of the most frequent are concept drift and covariate shift. With concept drift, the relationship between the system's inputs and its outputs is not stable over time or has not been adequately captured; an example is the relationship between the color of someone’s skin (which may vary with race or sun exposure) and the diagnosis decision. Covariate shift occurs when the data fed into an algorithm during use differs from the data that trained it.
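Covariate shift can be monitored without labels by comparing the distribution of an input feature during use with its distribution in the training data. One common statistic for this is the Population Stability Index (PSI). The sketch below is a minimal version, assuming a single numeric feature (for example, a hypothetical mean lesion brightness per image) and fixed bin edges; the values are made up. PSI cannot detect concept drift, which requires outcome labels.

```python
import math

def histogram_shares(values, edges):
    """Fraction of values in each bin [edges[k], edges[k+1]); values outside are not counted."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for k in range(len(edges) - 1):
            if edges[k] <= v < edges[k + 1]:
                counts[k] += 1
                break
    return [c / len(values) for c in counts]

def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI = sum over bins of (a - e) * ln(a / e); larger means more shift."""
    psi = 0.0
    for e, a in zip(histogram_shares(expected, edges), histogram_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi

bins = [0, 20, 40, 60, 80, 100]
training = [45, 50, 55, 60, 65, 70, 75, 52, 58, 68]
deployment = [25, 30, 35, 38, 42, 45, 48, 33, 28, 55]  # e.g. darker images from a new camera

psi_same = population_stability_index(training, training, bins)
psi_shifted = population_stability_index(training, deployment, bins)
print(f"PSI vs. itself: {psi_same:.3f}, PSI vs. deployment: {psi_shifted:.3f}")
```

A frequently quoted rule of thumb treats a PSI above about 0.25 as a significant shift, though any alert threshold would need to be validated for the specific application.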

The third concerns the complexity of the overall systems in which machine learning is embedded. Consider a device that diagnoses disease from images that doctors input, such as IDx-DR, which identifies eye disorders like diabetic retinopathy and macular edema. The quality of any diagnosis depends on how clear the images provided are, the specific algorithm used by the device, the data that algorithm was trained on, whether the doctor inputting the images received appropriate instruction, and so on. With so many parameters, it’s difficult to assess whether and why such a device may have made a mistake, let alone be certain about its behavior.

In a recent survey-based study cited by Das, et al. [1], Jutzi, et al. [2] examined the views of patients in Germany toward the use of AI in melanoma diagnosis. Of the 298 participants, 154 (51.7%) had a diagnosis of melanoma. Notably, most respondents (94%) supported the use of AI in healthcare. This is an encouraging finding, as patient acceptance plays an important role in the success of any healthcare-related decision. In a questionnaire-based study by Oh, et al. [12], only 5.9% of the 669 physicians who completed the questionnaire reported good familiarity with AI. Acceptance of ML in skin cancer diagnosis by dermatologists is still very low. Oh, et al. suggest that if ML can deliver greater accuracy and earlier detection, it may be well accepted by clinicians. AI will not replace clinicians, but rather assist them in better evaluating and hence managing patients.

Color pigment-based melanoma diagnostics

Human skin tones fall within a small gamut of the complete color spectrum. Skin tones are difficult to describe verbally, but color can be expressed numerically, and these values are useful for comparing tones in medical research. The Commission Internationale de l’Éclairage (CIE) L*a*b* color space is the international standard for the numerical representation of color. Skin color varies among sites within the body; even twins show color differences. The skin color of the gluteal region represents constitutive color, whereas cheek color represents facultative color. Exposed areas such as the cheek have increased a* values because of vascularization. The size of epidermal melanin melanosomes influences the L* value. The a* value indicates erythema while the b* value indicates tanning, and the transition from erythema to tanning can be measured objectively. Color values of newborns can give information about their health status, and color values of elderly people are useful for assessing their nutritional status. Comparing the color of ulcers over time indicates their healing status. There was a 5 nmol/L increase in 25-hydroxyvitamin D levels for every 10-degree lower skin color value on the forearm, indicating that forearm color can be a reliable indicator of vitamin D status. In males, skin reflectance values increase by 8.2% for every 10-degree increase in latitude in the Northern hemisphere, but by only 3.3% in the Southern hemisphere, indicating that skin color is darker in the Southern hemisphere at the corresponding latitude. In colorimetric terms, the Minimal Erythema Dose (MED) has been defined as the UV dose that increases the a* value by 2.5 units. A significant risk of melanoma was found with increasing a* values in Italian patients. In an objective assessment of erythema in 22 psoriatic patients, the L*a*b* values of erythematous plaques were useful in determining Psoriasis Area and Severity Index (PASI) scores. Fitzpatrick skin types correlated with MED values. There was a significant relationship between L* values and MED values, but not between a* or b* values and MED values. The MED for Narrow Band Ultraviolet B (NBUVB) has become a basic criterion for phototherapy protocols; hence skin type and L* values are useful for predicting the response to NBUVB therapy. Establishing a rules-based algorithm on the L*, a* and b* values together with geographical location, and monitoring their variation over time, could indicate melanoma more precisely.
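To make the L*a*b* representation and the proposed rule-based monitoring concrete, the sketch below converts an 8-bit sRGB color to CIE L*a*b* (D65 white point, standard sRGB transfer function and matrix) and then applies two rules to a lesion's measurements over time: total color change (the CIE76 difference ΔE*ab) and darkening (a drop in L*). The thresholds `DELTA_E_LIMIT` and `L_DROP_LIMIT` are hypothetical placeholders, not clinically validated values, and geographical location is left out for brevity. In practice, lesion color would typically be averaged over a segmented region under controlled lighting rather than read from a single pixel.

```python
import math

def srgb_to_lab(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Convert an 8-bit sRGB color to CIE L*a*b* (D65 reference white)."""
    def to_linear(v: int) -> float:
        c = v / 255.0  # undo the sRGB gamma curve
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124 * rl + 0.3576 * gl + 0.1805 * bl
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    z = 0.0193 * rl + 0.1192 * gl + 0.9505 * bl

    def f(t: float) -> float:
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white
    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)

# Hypothetical placeholder thresholds, NOT clinically validated values.
DELTA_E_LIMIT = 5.0  # allowed total color change between first and latest visit
L_DROP_LIMIT = 5.0   # allowed decrease in lightness L* (darkening)

def delta_e76(lab1, lab2) -> float:
    """CIE76 color difference: Euclidean distance in L*a*b* space."""
    return math.dist(lab1, lab2)

def color_rules(history: list[tuple[float, float, float]]) -> list[str]:
    """Compare a lesion's latest L*a*b* measurement with its first one."""
    baseline, latest = history[0], history[-1]
    fired = []
    if delta_e76(baseline, latest) > DELTA_E_LIMIT:
        fired.append("color_change")
    if baseline[0] - latest[0] > L_DROP_LIMIT:
        fired.append("darkening")
    return fired

print(srgb_to_lab(255, 255, 255))  # close to (100, 0, 0)
print(color_rules([(60.0, 15.0, 20.0), (58.0, 16.0, 21.0), (50.0, 20.0, 25.0)]))
```

Keeping each rule as a named, explicit comparison means every flag can be traced back to the measurement that triggered it, which is the property the rule-based side of the proposal relies on.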

Hybrid approach

Machine learning is taking the world by storm, and many companies that use rules engines for making business decisions are starting to leverage it [15]. However, the two technologies are geared toward different problems. Rules engines execute discrete logic that must be applied with 100% precision, whereas machine learning takes a number of inputs and tries to predict an outcome. It is important to understand the strengths of both technologies and identify the right solution for the problem. In some cases, the choice is not one or the other; the question is how to use both together to get the maximum value. ML models can use various features to reach a conclusion, which is then used as one of the inputs to a rule-based system [16-18]. This works by splitting a complicated problem into two parts: the more complex part uses ML to process complex data patterns, and once the data is reduced to a simple conclusion, the rule-based system is used to make an accurate decision. Figure 3 shows the logical flow of this decision making.

Figure 3: Hybrid approach flow.
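The hybrid flow above, in which an ML model reduces complex image data to a simple conclusion that then feeds a deterministic rule layer, can be sketched as follows. The classifier itself is not shown: `ml_probability` stands for the output of a trained image model, `color_rules_fired` for the names of any color rules that fired (for instance, rules on L*a*b* changes over time as proposed in the color section), and `symptomatic` for signs such as bleeding or itching. The cutoffs and the three outcome labels are hypothetical placeholders, not a clinical protocol.

```python
from dataclasses import dataclass

# Hypothetical cutoffs on the ML output; NOT clinically validated values.
HIGH_RISK = 0.8
MODERATE_RISK = 0.5

@dataclass
class LesionEvidence:
    ml_probability: float         # assumed output of a trained image classifier
    color_rules_fired: list[str]  # names of color rules that fired, if any
    symptomatic: bool             # e.g. the lesion bleeds, itches or burns

def hybrid_decision(ev: LesionEvidence) -> str:
    """Deterministic rule layer that takes the ML conclusion as one input."""
    if ev.ml_probability >= HIGH_RISK:
        return "refer for biopsy"
    if ev.ml_probability >= MODERATE_RISK and (ev.color_rules_fired or ev.symptomatic):
        return "refer for biopsy"
    if ev.ml_probability >= MODERATE_RISK or ev.color_rules_fired or ev.symptomatic:
        return "dermatologist review"
    return "routine monitoring"

print(hybrid_decision(LesionEvidence(0.62, ["color_change"], False)))
```

The pattern recognition stays inside the ML model, while the final step is discrete, deterministic logic that can be audited rule by rule, which matches the division of labor the text describes.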

With the development of science and technology, the accuracy and efficiency of skin cancer classification are constantly improving. In AI-based clinical diagnosis of skin cancer, however, the final diagnosis often depends on the number of samples, the image quality and the devices used, which makes it highly subjective and prone to a high rate of misdiagnosis. Recently, following the success of deep learning in medical image analysis, several researchers have applied deep learning methods to skin cancer classification in an end-to-end manner; however, accurately diagnosing melanoma needs a rules-based hybrid approach that leverages color and its changes over time.

In this study, we present a comprehensive overview of the most recent breakthroughs in deep learning algorithms and in skin color pigment-based diagnostics for skin cancer classification. First, we introduced the different types of skin cancer, deep learning-based diagnosis, and the limitations on its diagnostic accuracy. Next, we presented the applications of color, and of its changes over time, in skin cancer. Finally, we proposed a hybrid approach that combines deep learning and a rules-based approach to improve diagnostic accuracy. Compared with other reviews, this paper presents a unique hybrid approach to skin cancer diagnostics that combines the best of breed from contemporary deep learning applications and the traditional color-based approach.

I am grateful to Dr. Srivenkateswaran Kothandapany, MD in Dermatology, and Dr. S. Shreyaa Vanju, MD in Dermatology, for their extensive personal and professional guidance and for teaching me a great deal about melanoma, its diagnosis, and advances in scientific research.

Citation: Senthil V, Kothandapany S (2023) Deep Learning and Rule-Based Hybrid Approach to Improve the Accuracy of Early Detection of Skin Cancer. J Clin Exp Dermatol Res. 14:646.

Received: 31-Jul-2023, Manuscript No. JCEDR-23-25296; Editor assigned: 04-Aug-2023, Pre QC No. JCEDR-23-25296 (PQ); Reviewed: 18-Aug-2023, QC No. JCEDR-23-25296; Revised: 25-Aug-2023, Manuscript No. JCEDR-23-25296 (R); Published: 01-Sep-2023, DOI: 10.35248/2168-9296.23.12.446

Copyright: © 2023 Senthil V. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution and reproduction in any medium, provided the original author and source are credited.