Journal of Information Technology & Software Engineering

Open Access

ISSN: 2165-7866


Research Article - (2022) Volume 12, Issue 4

Automated Teller Machines (ATMs) are used for transactions by many different users in day-to-day life because of their high convenience, and they have made banking easier. However, many frauds occur at ATMs, so there is a need to make them more secure. The proposed system performs real-time face detection and recognition using Haar Cascade and a Convolutional Neural Network (CNN). The software first captures pictures of every user and stores the details in a database. The proposed method builds on a standard face detection system and consists of three stages. The first stage detects the face in the image. The second stage obtains the facial details needed for expression recognition, which is used to detect liveness: feature extraction keeps the most useful features of the camera image and checks whether all facial details are visible, and the resulting feature vector forms the face representation. In the third stage, the classifier is designed to recognize what an occluded face looks like.

CNN; Expression recognition; Face detection; Haar cascade; Liveness

The advent of technology brings a wide range of tools that aim to maximize customer satisfaction. An ATM is a machine that makes money transactions more convenient for customers, but it has both advantages and disadvantages. Current ATMs rely on nothing more than an access card and a PIN for authentication. This opens the way to many fraudulent attempts and misuse, such as card theft, PIN theft, hacking of customer account information and attacks on other security features. These weaknesses motivate an ATM that uses a face recognition system.

Face recognition based on visual comparison depends on the similarity between the features extracted from the captured image and those extracted from the stored images. A face recognition system automatically identifies a person from a digital image source. One of the best ways to do this is to compare selected facial features of the captured face with those stored in a face database.
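The comparison against a face database can be sketched as a nearest-neighbour search over stored feature vectors. This is a minimal illustration, assuming every face has already been reduced to a fixed-length feature vector; the function names `identify` and `euclidean`, the Euclidean metric and the threshold value are illustrative assumptions, not the method used in this paper.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(probe, database, threshold):
    """Return the enrolled name whose stored feature vector is closest to
    `probe`, or None if even the best match is farther than `threshold`."""
    best_name, best_dist = None, float("inf")
    for name, stored in database.items():
        dist = euclidean(probe, stored)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold else None


# Hypothetical 4-dimensional feature vectors for two enrolled users.
db = {"alice": [0.1, 0.8, 0.3, 0.5], "bob": [0.9, 0.2, 0.7, 0.1]}
print(identify([0.12, 0.79, 0.31, 0.48], db, threshold=0.2))  # alice
print(identify([0.5, 0.5, 0.5, 0.5], db, threshold=0.2))      # None
```

Returning `None` for a distant best match is what lets the system reject an unregistered face instead of forcing it onto the nearest account holder.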

This work aims to provide a secure ATM system based on face recognition, drawing on computer vision research in human face recognition [1].

A face recognition program can either identify a person or verify a claimed identity automatically from a digital photo.

Because ATM cards are frequently subject to deception and misuse, we build a secure face-biometric authentication system that is available via a live server and is protected. The proposed system can assist the banking sector in many ways.

The system is designed to perform the following tasks:

• Identifying a valid account holder using a CNN-based face recognition algorithm.

• Checking that a live user is present with the help of expression recognition.

• Creating a complete, easy-to-use automated system with high accuracy.

• Reducing the amount of fraud in the ATM system by providing additional security features such as real-time face recognition.

• Identifying all instances of objects from a known category, such as people or faces in a photo.

In addition to the bounding box of the face, the face detector can locate the regions of the eyes, nose and mouth.

Figure 1 shows the block diagram of the system.

Figure 1: System block diagram.

Proposed System

The proposed block diagram is described as follows:

1) Face Image:

The camera installed in the ATM captures the user's face image and stores it in the database.

2) Pre-Processing:

After the user's face image is captured, it undergoes pre-processing, which improves the image data by removing noise and distortions and enhancing the image features that are essential for later processing.
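The paper does not specify which pre-processing operations it applies. As a minimal sketch, assuming a colour-to-grayscale conversion followed by a 3x3 mean filter for noise removal, the step could look like this (the function names are hypothetical; the grayscale weights are the ITU-R BT.601 luma coefficients):

```python
def to_grayscale(rgb):
    """Convert an RGB image (rows of (r, g, b) tuples) to 8-bit grayscale
    using the ITU-R BT.601 luma weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb]


def mean_filter(gray):
    """Smooth a grayscale image with a 3x3 mean filter to suppress noise.
    Border pixels average only the neighbours that exist."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += gray[yy][xx]
                        count += 1
            out[y][x] = round(total / count)
    return out


noisy = [[0, 0, 0],
         [0, 255, 0],
         [0, 0, 0]]
print(mean_filter(noisy)[1][1])  # 28: the isolated bright pixel is spread out
```

A mean filter is the simplest smoothing choice; a median or Gaussian filter would be equally plausible for this stage.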

3) Segmentation:

After pre-processing, the image undergoes segmentation, in which the pre-processed face image is partitioned into multiple segments (sets of pixels) to simplify its representation or change it into something easier to analyze.
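The segmentation method is not named in the paper. As one common choice, the sketch below assumes a global threshold chosen by Otsu's method, which picks the intensity that best separates the pixels into two classes; the function names are hypothetical.

```python
def otsu_threshold(gray):
    """Return the threshold t (0-255) that maximises the between-class
    variance of pixels <= t (background) and pixels > t (foreground)."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * hist[i] for i in range(256))
    w_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w_bg += hist[t]              # number of pixels with value <= t
        if w_bg == 0:
            continue
        w_fg = total - w_bg
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def segment(gray):
    """Binary mask: 1 for pixels above the Otsu threshold, else 0."""
    t = otsu_threshold(gray)
    return [[1 if v > t else 0 for v in row] for row in gray]


gray = [[10, 12, 200],
        [11, 210, 205]]
print(otsu_threshold(gray))  # 12
print(segment(gray))         # [[0, 0, 1], [0, 1, 1]]
```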

4) Feature Extraction:

In the feature extraction process, the number of features in the dataset is reduced by creating new features from the existing ones; the new features concentrate the most useful information and are essential for the later processes.
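To illustrate "creating new features from the existing ones", the sketch below assumes the simplest possible reduction: each non-overlapping block of pixels is replaced by its average, so a 4x4 image (16 values) becomes a 4-value feature vector. This is a hypothetical stand-in, not the feature extractor used in the paper, where the CNN learns its features.

```python
def block_features(gray, block=2):
    """Reduce an H x W grayscale image to a shorter feature vector by
    averaging each non-overlapping block x block patch.
    H and W are assumed to be multiples of `block`."""
    h, w = len(gray), len(gray[0])
    feats = []
    for y in range(0, h, block):
        for x in range(0, w, block):
            patch = [gray[y + dy][x + dx]
                     for dy in range(block) for dx in range(block)]
            feats.append(sum(patch) / len(patch))
    return feats


gray = [[0, 2, 4, 6],
        [2, 4, 6, 8],
        [10, 10, 20, 20],
        [10, 10, 20, 20]]
print(block_features(gray))  # [2.0, 6.0, 10.0, 20.0]
```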

5) Classification:

i) Training the algorithm

• The algorithm is trained on the face data using properties of each image in the dataset, such as pixel intensities.

• When new inputs are fed into the database multiple times, the algorithm is trained further on that face data.

• Classification of the face data takes place with the help of expression recognition, in which the user's face image is classified into categories such as neutral, happy, sad and angry.

• The algorithm is trained so that when a person smiles in front of the camera, it detects the smile and shows the message “Happy” on the screen next to the person's face.

• Similarly, when the person shows other expressions such as neutral (neither happy nor sad), sad or angry, the system displays the corresponding expression next to the person's face.
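The labelling step above can be sketched as follows, assuming the CNN outputs one raw score per expression class. The class order in `EXPRESSIONS` and the function names are hypothetical; the softmax simply turns the scores into probabilities so the most likely label can be shown next to the face.

```python
import math

# Hypothetical order of the CNN's output neurons.
EXPRESSIONS = ["Neutral", "Happy", "Sad", "Angry"]


def softmax(scores):
    """Numerically stable softmax: turns raw scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def expression_label(scores):
    """Return the label to display next to the face and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EXPRESSIONS[best], probs[best]


label, p = expression_label([0.2, 2.5, -1.0, 0.1])
print(label, round(p, 2))  # Happy 0.82
```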

ii) Applying the CNN operation

A CNN is a deep learning algorithm that takes an image as input, assigns importance to various aspects of that image and is able to differentiate one image from another.

iii) Extracting the histograms:

• The histogram of an image gives the number of pixels in the image at each intensity value.

• It provides information on the degree of variation in the data and shows the distribution pattern of the data by plotting as bars the number of units in each class or category. For example, a histogram takes continuous (measured) data such as temperature, time and weight and displays its distribution.
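An intensity histogram of an 8-bit grayscale image can be computed with one counting pass. The sketch below is illustrative (the function names are hypothetical) and also normalizes the counts so that histograms of differently sized images are comparable.

```python
def intensity_histogram(gray, bins=256):
    """Count how many pixels fall at each intensity value 0..bins-1."""
    hist = [0] * bins
    for row in gray:
        for value in row:
            hist[value] += 1
    return hist


def normalise(hist):
    """Divide by the pixel count so the histogram sums to 1."""
    total = sum(hist)
    return [h / total for h in hist]


gray = [[0, 0, 128],
        [255, 128, 0]]
hist = intensity_histogram(gray)
print(hist[0], hist[128], hist[255])  # 3 2 1
```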

Haar cascade algorithm

Human face detection has played an important role in human-machine interaction and in computer vision. As personal identity information, the human face has the advantages of diversity and of being non-intrusive. However, because of large variations in lighting, expression and background, along with other uncertainties, face detection remains a challenging problem in real-world systems. In 2002, Ojala used the Local Binary Pattern (LBP) to classify textures [2]. LBP is a texture operator with strong discriminative power and is also used for face detection and recognition. However, although LBP features have great discriminative power, they can miss local structural information under certain circumstances. In 2004, Viola and Jones developed a cascade classifier algorithm that uses Haar-like features to detect human faces. Since then, many researchers have worked in this area using Haar features or modifications of them and have made significant progress.
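The basic 3x3 LBP operator mentioned above compares each pixel with its eight neighbours and packs the results into an 8-bit code. A minimal sketch follows; the neighbour ordering (clockwise from the top-left) and the function names are conventions chosen here for illustration.

```python
# Neighbour offsets (dy, dx), clockwise starting at the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(gray, y, x):
    """Basic 3x3 LBP code of pixel (y, x): bit i is 1 when the i-th
    neighbour is greater than or equal to the centre pixel."""
    centre = gray[y][x]
    code = 0
    for i, (dy, dx) in enumerate(OFFSETS):
        if gray[y + dy][x + dx] >= centre:
            code |= 1 << i
    return code


def lbp_image(gray):
    """LBP codes for every interior pixel (the 1-pixel border is skipped)."""
    h, w = len(gray), len(gray[0])
    return [[lbp_code(gray, y, x) for x in range(1, w - 1)]
            for y in range(1, h - 1)]


patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
print(lbp_code(patch, 1, 1))  # 241
```

A histogram of these codes over a face region is what LBP-based recognizers typically compare between images.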

Associated Steps:

• The early stages use a small number of simple features and already reject many windows that contain no facial features, which reduces false positives cheaply; the later stages use more features and focus on lowering the remaining error rate, which reduces false positives further.

• Features are therefore evaluated in stages. For example, when a window lies in a region without a face, only the first stage, which has two rectangular features, is evaluated, and the window is discarded before the second stage starts. Only a window that contains the face passes through both stages and is reported as a face.

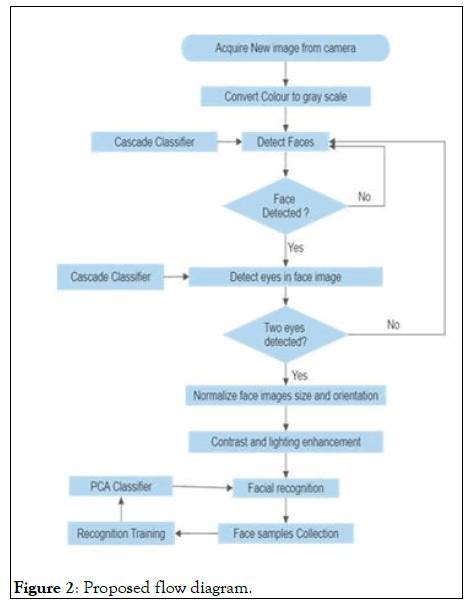

• Features are applied to the image in stages. The early stages contain simple features, while the later stages contain more complex features, complex enough to capture the details of facial structure. If the first stage finds nothing in a window, the window is discarded from the rest of the process and the detector moves on to the next window. This saves processing time, because windows without faces are not processed by most stages (Figure 2).

Figure 2: Proposed flow diagram.

• The second stage is processed only when the features of the first stage are found in the window. In general, if a window passes a stage, it moves on to the next stage; if it fails, it is discarded.
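The staged rejection described above can be sketched with an integral image, a two-rectangle Haar-like feature and a toy two-stage cascade. This is a simplified illustration, not a trained detector: real detectors such as OpenCV's `CascadeClassifier` use many weak classifiers per stage learned from data, whereas the stages, thresholds and votes here are hypothetical values chosen to show the control flow.

```python
def integral_image(gray):
    """ii[y][x] holds the sum of gray[0..y-1][0..x-1] (extra zero row/column)."""
    h, w = len(gray), len(gray[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle whose top-left corner is (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_vertical(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: upper half minus lower half."""
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)


def run_cascade(ii, window, stages):
    """Pass a window (x, y, w, h) through the stages in order.

    Each stage is (weak_classifiers, stage_threshold); a weak classifier
    (feature_fn, feature_threshold, vote) adds its vote when the feature
    value reaches its threshold. Returns (is_face, stages_evaluated).
    """
    for i, (weak_classifiers, stage_threshold) in enumerate(stages, start=1):
        score = 0.0
        for feature_fn, feature_threshold, vote in weak_classifiers:
            if feature_fn(ii, *window) >= feature_threshold:
                score += vote
        if score < stage_threshold:
            return False, i  # rejected: the remaining stages never run
    return True, len(stages)


# Hypothetical two-stage cascade: the second stage is stricter.
STAGES = [
    ([(two_rect_vertical, 500, 1.0)], 1.0),
    ([(two_rect_vertical, 1000, 1.0)], 1.0),
]

face_like = [[200] * 4, [200] * 4, [50] * 4, [50] * 4]
flat = [[100] * 4 for _ in range(4)]
window = (0, 0, 4, 4)
print(run_cascade(integral_image(face_like), window, STAGES))  # (True, 2)
print(run_cascade(integral_image(flat), window, STAGES))       # (False, 1)
```

The flat window is discarded after a single stage, which is where the time saving of the cascade comes from; the integral image makes every rectangle sum cost four lookups regardless of its size.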

CNN Classifier: In a CNN, each convolution layer shares its parameters (the filter weights) across all positions of the image. The advantage of this is that the memory requirements are reduced, and the number of parameters to be trained is reduced as well, which improves the performance of the algorithm. In addition, many other machine learning algorithms require the images to be pre-processed or features to be extracted by hand, whereas these steps are rarely needed when a CNN is used, because it learns its features directly from the image.
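The saving from parameter sharing can be made concrete by counting trainable parameters. The sketch assumes a hypothetical 48x48 grayscale face image mapped to 32 feature maps by a 3x3 "valid" convolution, and compares it with a fully connected layer producing the same number of outputs.

```python
def conv_params(kernel, in_channels, filters):
    """Trainable parameters of a conv layer: one shared k x k x C_in kernel
    plus one bias per filter, independent of the image size."""
    return filters * (kernel * kernel * in_channels + 1)


def dense_params(in_units, out_units):
    """Trainable parameters of a fully connected layer (weights + biases)."""
    return out_units * (in_units + 1)


# 48 x 48 input, 32 output maps of 46 x 46 (3 x 3 kernel, no padding).
print(conv_params(3, 1, 32))               # 320
print(dense_params(48 * 48, 46 * 46 * 32))  # 156076160
```

The convolution needs 320 parameters, while a dense layer with the same output size would need about 156 million, which is why weight sharing reduces both memory use and training effort.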

Deep learning also has drawbacks. One of them is that it requires many samples to build a deep model, which restricts the use of this algorithm. Nevertheless, excellent results have been achieved in the fields of face recognition and character recognition, so this article presents a simple study of CNN-based face recognition technology. The implementation can be broadly divided into four phases: the face localization phase, the face recognition phase, the feature extraction phase and the classification phase.

• Convolution layer

This layer extracts features from the input image. Its neurons convolve the input image with learned filters to produce a feature map, and this output is fed as input to the next layers.

• Pooling layer

This layer reduces the size of the feature map while retaining the important features. It is usually placed between two convolution layers.

• ReLU layer

ReLU is a non-linear function that replaces all negative values in the feature map with zero, which introduces non-linearity into the network.

• Fully connected layer

A fully connected (FC) layer means that every neuron in the previous layer is connected to every neuron in the next layer. This layer classifies the input image into different categories based on the training data.
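The four layer types above can be chained into a tiny forward pass. This pure-Python sketch assumes a single-channel image, one hand-picked filter and made-up fully connected weights, purely to show what each layer does; a real model would use a deep learning framework with learned weights.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation, as in CNN libraries), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    return [[sum(image[y + i][x + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for x in range(w - kw + 1)]
            for y in range(h - kh + 1)]


def relu(fmap):
    """Replace every negative value in the feature map with zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping size x size max pooling (trailing rows/cols dropped)."""
    return [[max(fmap[y + i][x + j] for i in range(size) for j in range(size))
             for x in range(0, len(fmap[0]) - size + 1, size)]
            for y in range(0, len(fmap) - size + 1, size)]


def fully_connected(vector, weights, biases):
    """Each output neuron is connected to every input value."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, biases)]


image = [[1, 1, 0, 0, 0] for _ in range(5)]  # bright left, dark right
kernel = [[1, 0, -1] for _ in range(3)]      # vertical-edge filter
fmap = relu(conv2d(image, kernel))           # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
pooled = max_pool(fmap)                      # [[3]]
flat = [v for row in pooled for v in row]
print(fully_connected(flat, weights=[[1.0], [-1.0]], biases=[0.0, 0.5]))  # [3.0, -2.5]
```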

Building the CNN model has four stages:

• Model construction

• Model training

• Model testing

• Model evaluation

The design of the model depends on the machine learning technique used; in this project, it was a Convolutional Neural Network. After the model is constructed, it is trained using the training data and the expected output for that data. Once the model has been trained, it can be tested. At this stage a second dataset is loaded. The model has never seen this dataset, so the measured accuracy reflects its true performance. After training and testing are completed, the model is evaluated and the stored model can be used in the real world [3,4].
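The construct, train, test and evaluate cycle can be sketched with a deliberately simple model. Here a nearest-centroid classifier stands in for the CNN (a substitution for illustration only), and the two-dimensional feature vectors and labels are hypothetical; the point is that the test samples are held out and never used during training.

```python
def split_per_class(samples, test_per_class=1):
    """Hold out `test_per_class` samples of every class as a test set
    the model never sees during training; the rest is training data."""
    seen, train, test = {}, [], []
    for features, label in samples:
        seen[label] = seen.get(label, 0) + 1
        (test if seen[label] <= test_per_class else train).append((features, label))
    return train, test


def train_centroids(train):
    """'Training': average the feature vectors of each class."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, v in enumerate(features):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [v / counts[lab] for v in acc] for lab, acc in sums.items()}


def predict(centroids, features):
    """Assign the class whose centroid is nearest (squared Euclidean distance)."""
    return min(centroids, key=lambda lab: sum(
        (a - b) ** 2 for a, b in zip(centroids[lab], features)))


def accuracy(centroids, test):
    """'Evaluation': fraction of held-out samples classified correctly."""
    correct = sum(predict(centroids, f) == label for f, label in test)
    return correct / len(test)


samples = ([([0.1 * i, 0.0], "happy") for i in range(4)]
           + [([5 + 0.1 * i, 5.0], "neutral") for i in range(4)])
train, test = split_per_class(samples)
model = train_centroids(train)
print(accuracy(model, test))  # 1.0
```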

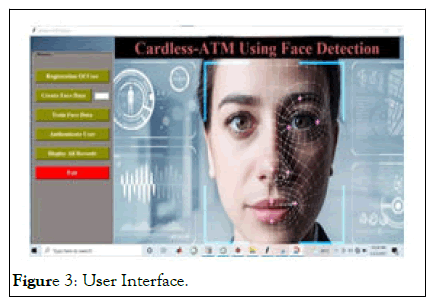

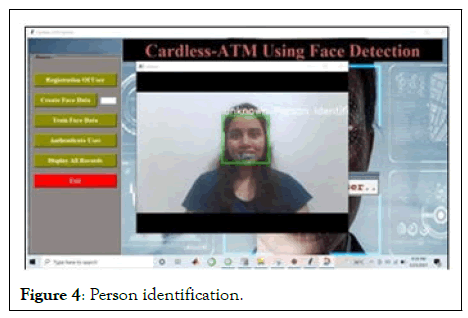

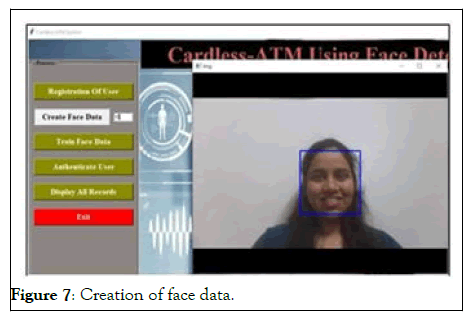

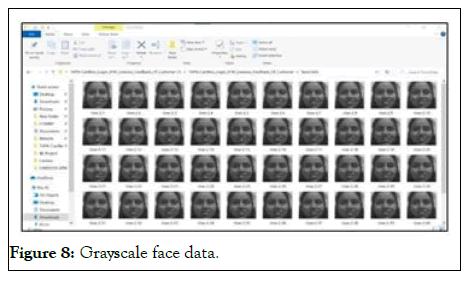

The following results represent the entire cardless ATM system. The display we designed for the ATM system consists of six options, which are shown whenever a user goes to the ATM. Our work can be divided into four categories, which are discussed in detail below (Figures 3-8).

Figure 3: User Interface.

Figure 4: Person identification.

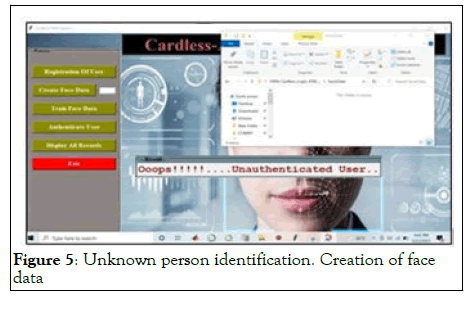

Figure 5: Unknown person identification.

Figure 6: Training of face data.

Figure 7: Creation of face data.

Figure 8: Grayscale face data.

User before registration

If a user who has not yet registered tries to authenticate, authentication is refused and the error message “Oops!!!!...Unauthenticated User.” is displayed.

• Training the Face

When the user clicks the Create Face Data button, the camera turns on, takes images of the user and stores them in the database.

• Face image Database

• Successful Authentication

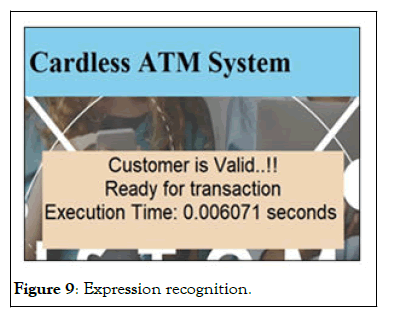

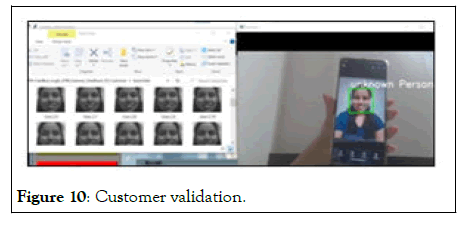

After the user clicks the Start Authentication button, the camera turns on and the system shows information about the user, such as the number of faces and the user's expression, to detect whether a live user is present in front of the camera (Figures 9-12).

Figure 9: Expression recognition.

Figure 10: Customer validation.

Figure 11: Registered User’s Photo Image Authentication.

Figure 12: Customer’s non-validation after showing a non-living image.

• Non-living image authentication:

In the third category, a person may show an image or photo of a registered user to the camera and try to make a transaction. To prevent this, we use facial expression recognition with the CNN algorithm to check the liveness of the person. If the person is not live, the system reports the person as unknown.

When an unknown person is identified, the system displays the message “unauthenticated user”. This greatly reduces the chance of fraud or other illegal activity [5].
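The final decision combines the face match with the liveness check. The paper does not give its exact liveness rule, so the sketch below assumes a hypothetical one: the user is asked to smile, and a live user must show a changing expression sequence that includes "Happy", whereas a printed photo shows the same expression in every frame. The function names and the welcome message are also hypothetical; the rejection text is the error message quoted earlier.

```python
UNAUTHENTICATED = "Oops!!!!...Unauthenticated User."


def is_live(frame_expressions, required="Happy"):
    """Hypothetical liveness rule: the expression must change across frames
    and include the requested expression. A printed photo shows one fixed
    expression in every frame and therefore fails."""
    return required in frame_expressions and len(set(frame_expressions)) > 1


def authenticate(matched_user, frame_expressions):
    """Grant access only to a registered user who also passes the liveness check.
    `matched_user` is None when no enrolled face matched."""
    if matched_user is None or not is_live(frame_expressions):
        return UNAUTHENTICATED
    return f"Welcome, {matched_user}"


print(authenticate("alice", ["Neutral", "Neutral", "Happy"]))  # Welcome, alice
print(authenticate("alice", ["Neutral", "Neutral", "Neutral"]))  # rejected: photo
```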

In this project, we have implemented a face-recognition-based system that provides a secure way to carry out money transactions.

We used two algorithms to develop this system, CNN and Haar Cascade, which improve the accuracy of our system and help us obtain better results. We developed and trained the algorithms so that the system captures real-time pictures and compares them with the registered user database. A successful match allows the money transaction to proceed. Such systems can help reduce the fraud rate related to money transactions.

Citation: Bajaj S, Dwada S, Jadhav P, Shirude R (2022) Card less ATM Using Deep Learning and Facial Recognition Features. J Inform Tech Softw Eng. 12:302

Received: 12-Sep-2022, Manuscript No. JITSE-21-11077; Editor assigned: 14-Sep-2022, Pre QC No. JITSE-21-11077 (PQ); Reviewed: 28-Sep-2022, QC No. JITSE-21-11077; Revised: 07-Oct-2022, Manuscript No. JITSE-21-11077 (R); Accepted: 14-Oct-2022; Published: 14-Oct-2022, DOI: 10.35248/2165-7866.22.12.302

Copyright: © 2022 Bajaj S et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.