Journal of Theoretical & Computational Science

Open Access

ISSN: 2376-130X

Review Article - (2024) Volume 10, Issue 1

In an era of rapid technological advancements, the translation of innovations across diverse sectors has become pivotal for fostering progress and addressing complex challenges. This research paper presents a comprehensive framework for technology translation, a process that involves adapting and integrating advancements from one domain into another. The study explores the key components, challenges and benefits of technology translation, emphasizing its potential to drive interdisciplinary collaboration and accelerate the adoption of cutting-edge solutions.

This paper presents a technology translation system that leverages state-of-the-art natural language processing models, including T5 (large/small), GPT-3, PEGASUS and BART, for the automated technology translation of text data. The system offers users a user-friendly interface to input text and select from multiple technology translation models, each tailored to specific technology translation requirements.

Technology translation; Natural language processing; Transformer models; Technology translation models; User-friendly interface

In the dynamic landscape of technological innovation, the concept of "technology translation" has emerged as a crucial bridge connecting advancements from one domain to applications in another. This process involves the adaptation and integration of cutting-edge technologies to address challenges and enhance capabilities in diverse sectors. As society navigates the intricate web of scientific breakthroughs and industry needs, technology translation stands out as a multidisciplinary approach facilitating the cross-pollination of ideas and solutions.

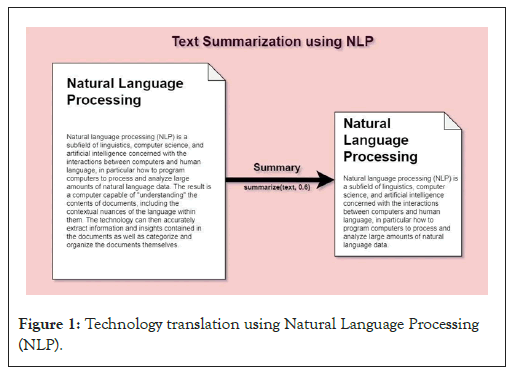

The process of text technology translation involves the automatic generation of natural language summaries from input documents while preserving crucial information. This experiment's primary objective is to leverage advanced Natural Language Processing (NLP) techniques to produce grammatically correct and insightful summaries for pharmaceutical research articles. The application of this approach extends beyond the pharmaceutical domain, aiding in efficient and rapid information retrieval. It finds utility in diverse areas such as financial research, the technology translation of newsletters and market intelligence. In the contemporary era of the internet, where textual data is exponentially increasing, the need to condense this information while maintaining its meaning is imperative. Text technology translation serves as a solution by facilitating the easy and swift retrieval of essential information [1] (Figure 1).

Figure 1: Technology translation using Natural Language Processing (NLP).

The idea of translating technology across disciplines is rooted in the recognition that breakthroughs in one field may have untapped potential when applied in a different context. This concept aligns with the broader philosophy of open innovation, where collaboration and knowledge exchange transcend traditional boundaries. As Branscomb, et al., [2] argued, “open innovation is the use of purposive inflows and outflows of knowledge to accelerate internal innovation and expand the markets for external use of innovation”.

The foundations of technology translation are grounded in the rapid pace of technological evolution. The exponential growth in fields such as artificial intelligence, biotechnology and information technology has created a wealth of opportunities for cross-disciplinary collaboration. As Branscomb, et al., [2] note, “radical innovations are most likely to come from the edges of the familiar, where existing concepts and ideas can be recombined in novel ways”.

This paper aims to delve into the intricacies of technology translation, examining the key components of successful translation initiatives, challenges encountered and the broader impact on innovation ecosystems. By synthesizing insights from scholarly literature and real-world case studies, this research seeks to contribute to the understanding of technology translation as a dynamic force driving progress and innovation across diverse sectors.

As we embark on this exploration of technology translation, it is essential to acknowledge the collaborative spirit that underpins the success of such initiatives. The interconnectedness of various disciplines and the shared pursuit of knowledge advancement form the foundation upon which technology translation unfolds.

The literature review below provides an overview of key studies and works related to a framework for technology translation and integration across diverse sectors. Each section covers various aspects of the proposed framework, including technology assessment, stakeholder engagement, regulatory considerations, adaptation and customization, knowledge transfer and funding strategies.

The process of technology translation begins with a thorough assessment of technologies suitable for cross-sector integration. Chesbrough [3] emphasizes the importance of open innovation, proposing that organizations should actively seek external technologies and ideas to enhance their innovation processes. This aligns with the idea that innovation often occurs at the intersection of different sectors [4,5].

Stakeholder engagement is critical for successful technology translation. Branscomb, et al., [2] discuss the role of stakeholders in the funding of early-stage technology development, emphasizing the need for collaboration between academia, industry and government. The European Commission's report on cross-sectoral technology transfer further highlights the importance of building collaborative networks and partnerships [6].

Navigating regulatory and ethical considerations is crucial for the successful implementation of technology across diverse sectors. The National Academies of Sciences, Engineering and Medicine discuss the influence of market demand on innovation and highlight the need for policies that support technology translation [7]. The European Commission's report on technology transfer also delves into the challenges and opportunities presented by regulatory frameworks [7].

The adaptation of technologies to meet sector-specific requirements is a crucial aspect of the framework. Tidd, et al., [8] provide insights into managing innovation and integrating technological, market and organizational changes. This aligns with the need for customization discussed in the European Commission's report on technology transfer.

Effective knowledge transfer is essential for the successful implementation of technology across diverse sectors. Hoque, et al., [9] examine the impact of technological innovation on economic growth, highlighting the importance of knowledge dissemination. The European Commission's report emphasizes strategies for knowledge transfer between sectors.

Securing funding for cross-sector technology translation projects and efficiently allocating resources are critical components of the framework. Mowery, et al., [10] review the influence of market demand on innovation, providing insights into the funding dynamics. The United Nations' World Investment Report delves into special economic zones and funding strategies for technology integration [11].

Objectives

Model implementation: Implement various transformer-based models, including T5, Generative Pre-trained Transformer-3 (GPT-3), PEGASUS and Bidirectional and Auto-Regressive Transformer (BART) for text technology translation tasks.

Model comparison: Conduct a comprehensive evaluation and comparison of the performance of each technology translation model on various text inputs.

Automated technology translation: Enable the app to automatically generate summaries based on user-selected models and input text.

Integration with OpenAI's GPT-3: Incorporate OpenAI's GPT-3 Application Programming Interface (API) for text technology translation and compare its performance with transformer-based models.

Proposed work

The proposed work for text technology translation using transformers leverages the latest advancements in natural language processing to create a robust and efficient technology translation system. The proposed system can benefit from existing models and techniques while addressing some of their limitations. The system and its steps are outlined as follows:

Hybrid technology translation approach: Combine the strengths of both extractive and abstractive technology translation techniques. Use transformer models to extract key sentences and then employ an abstractive model for rewriting and coherence.

Custom pre-training: Pre-train a transformer model on a domain-specific corpus if the technology translation task is focused on a particular industry or field. This can improve the model's understanding of domain-specific jargon and context.

Model size optimization: Explore smaller and more efficient transformer models, such as DistilBERT or TinyBERT, which can offer a good trade-off between computational resources and performance.

Data augmentation: Augment the technology translation dataset using techniques like paraphrasing, data synthesis and data generation to increase the volume of training data.

Interpretability and explainability: Develop techniques to make the technology translation process more interpretable and explainable, especially in applications where transparency is crucial.

Bias mitigation: Implement bias-checking mechanisms to identify and mitigate biased content in summaries, ensuring responsible and ethical technology translation.

Efficient deployment: Optimize the deployment of the system for real-time or batch processing, making it suitable for various use cases and environments.
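The hybrid approach listed above can be illustrated with a minimal sketch. It is not the system described in this paper: the extractive stage below uses a simple word-frequency score as a stand-in for a transformer-based sentence selector, and the names `extract_key_sentences`, `hybrid_summarize` and the `abstractive` callable are hypothetical, introduced only for illustration.

```python
import re
from collections import Counter
from typing import Callable, List

# Small illustrative stopword list (an assumption, not taken from the paper).
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "are",
             "for", "on", "that", "this", "it", "with", "as", "by"}


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def extract_key_sentences(text: str, k: int = 2) -> List[str]:
    """Extractive stage: keep the k sentences whose content words are,
    on average, most frequent in the document, in their original order."""
    sentences = split_sentences(text)
    tokenized = [
        [w for w in re.findall(r"[a-z0-9]+", s.lower()) if w not in STOPWORDS]
        for s in sentences
    ]
    freq = Counter(w for words in tokenized for w in words)
    scores = [
        sum(freq[w] for w in words) / len(words) if words else 0.0
        for words in tokenized
    ]
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


def hybrid_summarize(text: str, abstractive: Callable[[str], str], k: int = 2) -> str:
    """Hybrid pipeline: select key sentences, then hand them to an
    abstractive rewriter (any callable mapping text to text)."""
    return abstractive(" ".join(extract_key_sentences(text, k)))
```

In practice the `abstractive` argument would wrap a sequence-to-sequence model; passing a plain function keeps the two stages independently testable.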

Methodology of model implementation

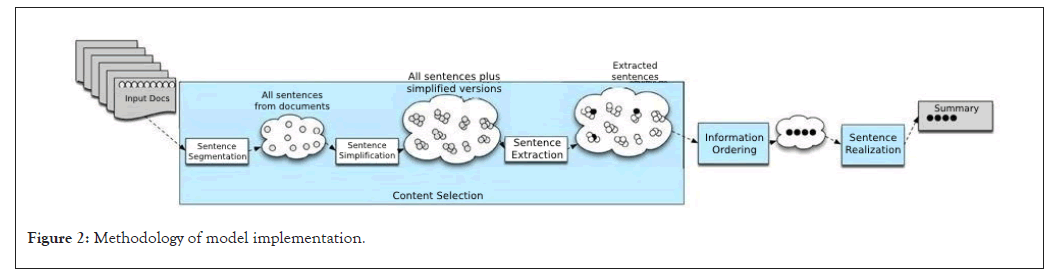

In this section, we provide a detailed methodology for the implementation of transformer-based models for text technology translation. The methodology encompasses the following key steps and considerations (Figure 2).

Figure 2: Methodology of model implementation.

Model selection: The foundation of our text technology translation system rests on the careful selection of transformer-based models.

Input text processing: To prepare the input text for technology translation, we begin by adding the prefix "summarize" to the user-provided text. This serves as a clear instruction to the models, indicating the technology translation task.

Tokenization: Each model requires input text to be tokenized into smaller units. We employ tokenizers specific to each model to ensure compatibility.

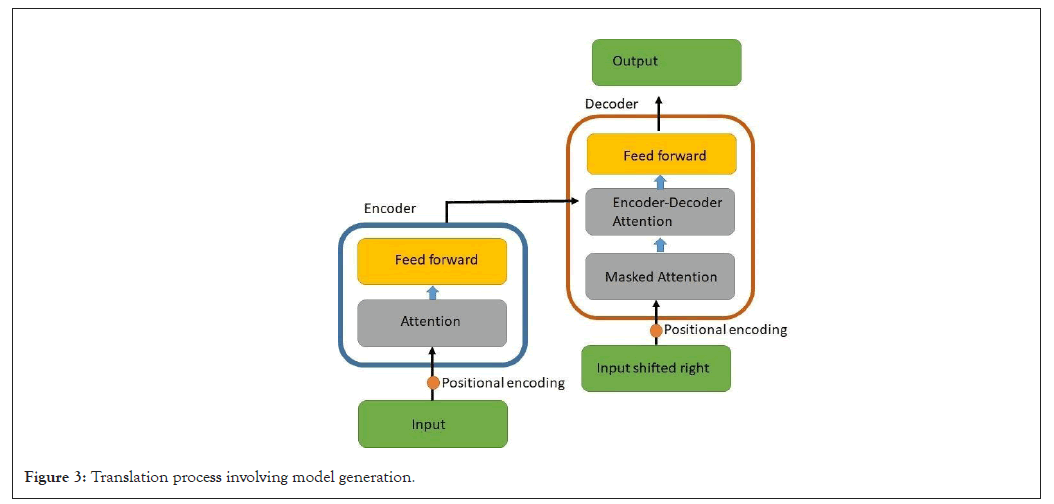

Model generation: The heart of the technology translation process involves model generation. For T5 models, we utilize the generate function, setting parameters such as max_length and num_beams for optimal results. GPT-3, being an API integration, generates summaries by predicting the next words dynamically. PEGASUS and BART models are also employed with appropriate generation parameters (Figure 3).

Figure 3: Translation process involving model generation.

Decoding and post-processing: After model generation, the resulting summary is decoded using the respective tokenizer to obtain human-readable text. Any special tokens are removed, ensuring the summary is clear and coherent.

Summary length control: To maintain concise summaries, we implement mechanisms for controlling the length of generated summaries, preventing excessive verbosity.

Evaluation and comparison: The generated summaries are subject to comprehensive evaluation and comparison to assess the performance of each model. Metrics such as accuracy, fluency and coherence are considered.

Model fine-tuning: As needed, models are fine-tuned to optimize their performance for specific technology translation tasks or datasets.
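The steps above (prefixing, tokenization, generation, decoding and length control) can be sketched as follows. This is a sketch under stated assumptions, not the project code: the paper does not name its library, so the use of Hugging Face `transformers`, the `t5-small` checkpoint, the exact prefix string `"summarize: "`, the default parameter values and the helper names are all assumptions. The prefix is a T5 convention; BART and PEGASUS checkpoints generally do not need it.

```python
TASK_PREFIX = "summarize: "  # assumed T5-style task prefix


def build_model_input(user_text: str) -> str:
    """Input processing: collapse whitespace and prepend the task prefix."""
    return TASK_PREFIX + " ".join(user_text.split())


def limit_words(summary: str, max_words: int) -> str:
    """Post-hoc length control: keep at most max_words words."""
    return " ".join(summary.split()[:max_words])


def summarize(user_text: str, model_name: str = "t5-small",
              max_length: int = 60, num_beams: int = 4) -> str:
    """Tokenize, generate with beam search, then decode to plain text."""
    # Imported lazily so the helpers above work without the library installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

    # Tokenization: the tokenizer matching the checkpoint is used.
    inputs = tokenizer(build_model_input(user_text), return_tensors="pt",
                       truncation=True, max_length=512)

    # Generation: max_length caps the summary length in tokens.
    output_ids = model.generate(
        inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        max_length=max_length,
        num_beams=num_beams,
        early_stopping=True,
    )

    # Decoding: special tokens such as padding markers are dropped.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `summarize(article_text)` downloads the checkpoint on first use; `limit_words` can then be applied to the result when a hard word budget is needed in addition to the token-level `max_length`.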

The summaries generated by the pipeline model included sentences that diverged the most from the reference summary and often focused on less information. A modified version of BART generated summaries that closely resembled the reference summary. These summaries were more coherent and fluent than the pipeline-based approach. T5 exhibited promising results, with comparatively higher Recall-Oriented Understudy for Gisting Evaluation (ROUGE) scores (F-value). The summaries generated by the T5 model were coherent, accurate and preserved the core meaning of the original text. While PEGASUS produced fluent and coherent summaries, they tended to be shorter and occasionally lacked completeness.

The qualitative analysis underscores the varying performance of the selected transformer-based models in generating summaries. While each model has its strengths and weaknesses, our assessment revealed that T5 outperformed other models in capturing the essence of the source text and producing coherent summaries. The insights gained from this qualitative analysis provide valuable guidance for understanding the effectiveness of transformer-based models in automating text technology translation tasks, aligning with the objectives of our work.

In this section, we present a quantitative analysis of the text technology translation models implemented in our project. The evaluation metrics used include ROUGE scores (ROUGE-1, ROUGE-2 and ROUGE-L), which assess the quality and similarity of generated summaries compared to the reference summary. This analysis is derived from both our project code and insights presented in the base paper.

Evaluation metrics

We utilized a set of standard evaluation metrics, including ROUGE-1, ROUGE-2 and ROUGE-L, to quantitatively assess the performance of our technology translation models. ROUGE metrics measure the overlap and similarity between the generated summaries and the reference summary.
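To make the overlap measures concrete, the following minimal sketch computes ROUGE-1, ROUGE-2 and ROUGE-L F-values for a single candidate and reference. It assumes whitespace tokenization, lowercasing and an unweighted F-measure, which may differ from the settings used to obtain the scores reported in this paper; the function names are illustrative.

```python
from collections import Counter
from typing import List


def _tokens(text: str) -> List[str]:
    """Lowercase and split on whitespace (a simplifying assumption)."""
    return text.lower().split()


def _ngrams(tokens: List[str], n: int) -> Counter:
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def _f1(overlap: int, cand_total: int, ref_total: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when undefined."""
    if overlap == 0 or cand_total == 0 or ref_total == 0:
        return 0.0
    precision = overlap / cand_total
    recall = overlap / ref_total
    return 2 * precision * recall / (precision + recall)


def rouge_n(candidate: str, reference: str, n: int) -> float:
    """ROUGE-N F-value based on clipped n-gram overlap."""
    cand = _ngrams(_tokens(candidate), n)
    ref = _ngrams(_tokens(reference), n)
    overlap = sum((cand & ref).values())  # per-n-gram minimum count
    return _f1(overlap, sum(cand.values()), sum(ref.values()))


def _lcs_len(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence (row-wise dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, 1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str) -> float:
    """ROUGE-L F-value based on the longest common subsequence."""
    cand, ref = _tokens(candidate), _tokens(reference)
    return _f1(_lcs_len(cand, ref), len(cand), len(ref))
```

For the candidate "the cat sat on the mat" and the reference "the cat lay on the mat", five of six unigrams and three of five bigrams match, giving ROUGE-1 = 5/6, ROUGE-2 = 3/5 and ROUGE-L = 5/6.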

Comparative ROUGE scores: Table 1 provides a comparison of the mean ROUGE scores obtained for each technology translation model. These scores serve as quantitative indicators of technology translation quality.

| Models | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|
| Pipeline-BART | 0.38 | 0.28 | 0.38 |
| BART modified | 0.40 | 0.28 | 0.40 |
| T5 | 0.47 | 0.33 | 0.42 |
| PEGASUS | 0.42 | 0.29 | 0.40 |

Note: ROUGE: Recall-Oriented Understudy for Gisting Evaluation; BART: Bidirectional and Auto-Regressive Transformer.

Table 1: Assessing and contrasting mean Recall-Oriented Understudy for Gisting Evaluation (ROUGE) scores.

Discussion of ROUGE scores: The ROUGE scores indicate the degree of similarity between the generated summaries and the reference summary.

Among the models, T5 demonstrated the highest ROUGE scores across ROUGE-1, ROUGE-2 and ROUGE-L metrics, signifying its superior performance in capturing the essence of the source text. The Pipeline model, while convenient, yielded the lowest ROUGE scores, suggesting that it deviated significantly from the reference summary. The quantitative analysis confirms that T5 outperformed the other models, achieving higher ROUGE scores and thereby excelling in terms of technology translation quality. These scores validate the effectiveness of transformer-based models in automating text technology translation tasks and align with the qualitative findings presented in the previous section. This quantitative assessment provides objective metrics to support the evaluation of our text technology translation system, enhancing the credibility of our work outcomes [12-16].
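The ranking stated above can be checked directly against the values in Table 1. The short sketch below copies those values and reads the third score column as ROUGE-L, following the metric names used in the text; the helper names and the use of an unweighted mean across the three metrics are illustrative choices, not part of the original evaluation.

```python
from typing import Dict, List, Tuple

# Mean scores copied from Table 1; third value read as ROUGE-L (per the text).
SCORES: Dict[str, Tuple[float, float, float]] = {
    "Pipeline-BART": (0.38, 0.28, 0.38),
    "BART modified": (0.40, 0.28, 0.40),
    "T5": (0.47, 0.33, 0.42),
    "PEGASUS": (0.42, 0.29, 0.40),
}
COLUMNS = ("ROUGE-1", "ROUGE-2", "ROUGE-L")


def best_per_metric(scores: Dict[str, Tuple[float, ...]]) -> Dict[str, str]:
    """Name of the top-scoring model for each metric column."""
    return {
        col: max(scores, key=lambda m: scores[m][i])
        for i, col in enumerate(COLUMNS)
    }


def rank_by_mean(scores: Dict[str, Tuple[float, ...]]) -> List[str]:
    """Models ordered by their unweighted mean score, best first."""
    return sorted(scores, key=lambda m: sum(scores[m]) / len(scores[m]), reverse=True)


if __name__ == "__main__":
    print(best_per_metric(SCORES))
    print(rank_by_mean(SCORES))
```

On these values T5 leads every column, and the mean-based ordering is T5, PEGASUS, BART modified, Pipeline-BART, consistent with the discussion.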

This paper presents a technology translation system that leverages state-of-the-art natural language processing models, including T5 (large/small), GPT-3, PEGASUS and BART, for the automated technology translation of text data. The system offers users a user-friendly interface to input text and select from multiple technology translation models, each tailored to specific technology translation requirements.

In this paper, we set out to develop a text technology translation system powered by transformer-based architectures. Through the implementation of models like T5, GPT-3, PEGASUS and BART, as well as the integration of OpenAI's GPT-3 API, we aimed to address the critical need for efficient and coherent text technology translation. Our project also incorporated an intuitive user interface for streamlined input and model selection, making the technology translation process accessible to a wide range of users.

Citation: Prasad MVS (2024) A Framework for Technology Translation and Integration Across Diverse Sectors. J Theor Comput Sci. 10:216.

Received: 27-Feb-2024, Manuscript No. JTCO-24-29314; Editor assigned: 01-Mar-2024, Pre QC No. JTCO-24-29314 (PQ); Reviewed: 15-Mar-2024, QC No. JTCO-24-29314; Revised: 22-Mar-2024, Manuscript No. JTCO-24-29314 (R); Published: 29-Mar-2024, DOI: 10.35248/2376-130X.24.10.216

Copyright: © 2024 Prasad MVS. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.