Journal of Depression and Anxiety

Open Access

ISSN: 2167-1044

Research Article - (2023) Volume 12, Issue 3

Background: Reliable prediction of clinical progression over time can improve the outcomes of depression. Little work has integrated the various risk factors for depression to determine which combinations of factors are most useful for identifying the individuals at greatest risk.

Materials and methods: This study demonstrates that data-driven Machine Learning (ML) methods such as Random Effects/Expectation Maximization (RE-EM) trees and Mixed Effects Random Forest (MERF) can be applied to reliably identify the variables most useful for classifying subgroups at greatest risk for depression. A total of 185 young adults completed measures of depression risk, including rumination, worry, negative cognitive styles, cognitive and coping flexibility, and negative life events, along with measures of depressive symptoms. We trained RE-EM tree and MERF algorithms and compared them with traditional Linear Mixed Models (LMMs) for predicting depressive symptoms prospectively and concurrently, using cross-validation.

Results: The RE-EM tree and MERF methods modeled complex interactions, identified subgroups of individuals, and predicted depression severity with accuracy comparable to that of LMM. Further, the machine learning models determined that brooding, negative life events, negative cognitive styles, and perceived control were the most relevant predictors of future depression levels.

Conclusion: Random effects machine learning models have the potential for high clinical utility and can be leveraged for interventions to reduce vulnerability to depression.

Keywords: Regression tree; Machine learning; Random forest; Depression; Mental health

Major Depressive Disorder (MDD) is a prevalent and debilitating disorder associated with tremendous personal and societal costs [1]. It is also one of the most common mental disorders among college students [2-4]. Research has indicated that depression in college students is associated with lower academic performance, increased levels of anxiety, alcohol and drug dependency, poorer quality of life, and self-harming behaviors [5-7]. Given the high prevalence and burden of MDD, more research is needed to evaluate risk factors for the development of depressive symptoms. Hypothesis-driven studies typically predict only a limited proportion of the variance in depression. To identify depression earlier, our models need to better predict who is at the greatest risk, and when that risk is most likely to increase.

Flexibility, the ability to adapt thoughts and behaviors to changing contextual demands, has been proposed as a group of broadly related constructs that may protect against depression [8-10]. Several approaches to assessing flexibility, or its absence (inflexibility), have been evaluated as predictors of depressed mood, including coping flexibility, explanatory flexibility, perseverative thinking such as rumination and worry, and cognitive flexibility. Other known risk factors for depression include exposure to negative life events, a negative cognitive style in responding to negative events, and the use of maladaptive emotion regulation strategies [11-14]. However, research has typically examined one or two of these constructs in isolation; little work has integrated these risk factors or compared them as longitudinal predictors of depression [15]. The goal of this study is to highlight machine learning techniques that can facilitate the identification of risk and protective factors and their interactions, which may in turn help reduce vulnerability to developing symptoms of depression in young adults. Although the set of risk factors we consider is not exhaustive, we present this analysis as an example of how machine learning techniques can integrate diverse measures in the evaluation of risk in ways that may have clinical utility.

When and how might random-effects machine learning modeling have utility for clinical scientists?

Statistical analysis of longitudinal data requires accounting for possible between-subject heterogeneity and within-subject correlation. Linear Mixed-effects Modeling (LMM), also called multilevel modeling, is one of the approaches typically used to analyze longitudinal data while accounting for the correlated nature of the data [16,17]. However, traditional mixed effects modeling has several limitations, including its parametric assumptions, labor-intensive testing of complex interactions, and overfitting when the number of predictors is large.
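
For reference, a random-intercept LMM of the kind described above can be written as follows. The notation is introduced here for illustration and is not taken from the original analysis: y_it is participant i's depression score at assessment t, x_it the predictors, beta the fixed effects, and b_i a participant-specific random intercept, assumed independent of the residual errors. Because every observation from participant i shares b_i, the model implies a constant within-person correlation (the intraclass correlation) between any two assessments.

```latex
\begin{aligned}
y_{it} &= \mathbf{x}_{it}^{\top}\boldsymbol{\beta} + b_i + \varepsilon_{it},
  \qquad b_i \sim \mathcal{N}(0,\sigma_b^2),\quad \varepsilon_{it} \sim \mathcal{N}(0,\sigma^2),\\
\operatorname{Corr}(y_{it}, y_{is} \mid \mathbf{x}) &= \frac{\sigma_b^2}{\sigma_b^2 + \sigma^2}, \qquad t \neq s.
\end{aligned}
```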

Random effects machine learning modeling may be useful in situations common to health science researchers. This is particularly true for heterogeneous disorders such as depression, for which several risk and protective factors have been proposed, the outcome is determined by many of these factors together, and the factors may interact in complex ways to determine an individual's likelihood of the outcome [18,19].

Regression trees can identify subgroups of individuals who share common characteristics and produce a user-friendly visual output (referred to as a decision tree). This output can then be provided to clinicians to tailor further assessment and intervention. One of the advantages of regression tree methods is that they do not require a hypothesized pattern of association between the predictors and the outcome. Moreover, higher-order interactions, and thus non-linear associations between the predictors and the outcome, are captured through the successive node splits of the tree. From a clinical perspective, this data-driven approach is a clear benefit. One example of relevance to depression research is the detection of moderators. Negative life events are well established as one of the strongest predictors of depression, and a variety of vulnerability factors have been identified that moderate the link between life events and depression, such as negative or inflexible cognitive styles, and interpersonal and emotion-regulatory vulnerabilities. However, for reasons of power and collinearity, it is often infeasible to include more than a few potential moderators simultaneously in mixed effects models, even though numerous potential moderators exist. Regression tree methods can help to address this critical limitation of the models typically used in depression research.
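
The moderator argument can be made concrete with a small sketch: a depth-limited regression tree that, at each node, chooses the split giving the largest reduction in the sum of squared errors (the CART criterion, also used by rpart for regression). The function names and the data are hypothetical and synthetic: life events raise the outcome only when brooding is high. No interaction term is specified, yet the tree splits first on brooding and then on life events within the high-brooding branch only.

```python
from itertools import product


def sse(values):
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(rows, y, features, min_leaf=2):
    """Return (feature, threshold, gain) with the largest SSE reduction, or None."""
    parent = sse(y)
    best = None
    for f in features:
        for thr in sorted({r[f] for r in rows}):
            left = [yi for r, yi in zip(rows, y) if r[f] < thr]
            right = [yi for r, yi in zip(rows, y) if r[f] >= thr]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            gain = parent - sse(left) - sse(right)
            if best is None or gain > best[2]:
                best = (f, thr, gain)
    return best


def grow(rows, y, features, depth):
    """Grow a depth-limited tree as nested dicts; leaves store the mean outcome."""
    split = best_split(rows, y, features) if depth > 0 else None
    if split is None or split[2] <= 1e-9:
        return {"leaf": sum(y) / len(y)}
    f, thr, _ = split
    left = [i for i, r in enumerate(rows) if r[f] < thr]
    right = [i for i, r in enumerate(rows) if r[f] >= thr]
    return {
        "feature": f,
        "threshold": thr,
        "left": grow([rows[i] for i in left], [y[i] for i in left], features, depth - 1),
        "right": grow([rows[i] for i in right], [y[i] for i in right], features, depth - 1),
    }


# Hypothetical data: life events matter only when brooding >= 14 (a moderator).
rows, y = [], []
for brooding, events in product([6, 8, 10, 14, 16, 18], [2, 4, 12, 16]):
    rows.append({"brooding": brooding, "events": events})
    y.append(5 + (0.8 * events if brooding >= 14 else 0))

tree = grow(rows, y, ["brooding", "events"], depth=2)
print(tree["feature"], tree["threshold"])                    # -> brooding 14
print(tree["right"]["feature"], tree["right"]["threshold"])  # -> events 12
```

The low-brooding branch stays a single leaf because life events carry no information there, which is exactly the moderation pattern a main-effects model would miss unless the interaction were specified in advance.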

Participants and procedure

Participants were undergraduate students at a large urban university, recruited using flyers on campus and from undergraduate psychology classes. To be included in the study, participants were required to have normal or corrected-to-normal vision and to be fluent in English. Students received psychology course credit or cash compensation for participation. All participants provided written informed consent using procedures approved by the University's Institutional Review Board (UIRB). The sample included 185 participants (56.7% female; 66.2% Caucasian/White, 19.1% African American/Black, 13.3% Asian/Pacific Islander, 0.6% Native American, 5.1% other race; 8.3% Hispanic/Latino), with a mean age of 21.98 years (SD=5.90).

Participants completed a set of self-report questionnaires at baseline, and at four follow-up assessments (Times 2-5) spaced three weeks apart. This time frame was chosen to allow for sufficient variability in negative life events while enabling the modeling of short-term fluctuations in internalizing symptoms [20]. All questionnaires used in the present analyses were administered at baseline. During the follow-up assessments, participants completed measures of rumination, worry, anxiety, depressive symptoms and exposure to negative life events in the prior three weeks. At Time 5, participants were interviewed to verify that life events reported met a priori criteria and occurred within the correct three-week interval.

Participants were required to have completed at least one of the four follow-up assessments to be included in the present analyses, which yielded a final sample size of 185. The measures were the Cognitive Flexibility Inventory (CFI), the Coping Flexibility (CF) scale and questionnaire, the Emotion Regulation Questionnaire (ERQ), the Life Events Scale and Interview (LESI), the Penn State Worry Questionnaire (PSWQ), the Ruminative Response Scale (RRS) and the Cognitive Responses to Life Events (CRLE). Table 1 summarizes the measures and the variables used in the models.

| Measures | Variables used in the model |
|---|---|
| Cognitive flexibility inventory | Perceived alternatives |
| | Perceived controllability |
| Coping flexibility scale | Problem-focused coping flexibility |
| | Emotion-focused coping flexibility |
| Coping flexibility questionnaire | Coping flexibility |
| Emotion regulation questionnaire | Reappraisal |
| | Suppression |
| Life events scale and interview | Negative life events |
| Penn State worry questionnaire | Worry |
| Ruminative response scale | Brooding |
| | Pondering |
| Cognitive responses to life events | Negative cognitive styles |

Table 1: Summary of measures and variables used in models.

Statistical and machine learning algorithms

In this work, we empirically assessed the performance of a linear mixed effects model, an RE-EM tree, and a Mixed Effects Random Forest (MERF) on repeated measurements of depression severity. Mixed effects models are used in statistics and econometrics for longitudinal data, in which observations are collected from the same units multiple times. They incorporate random effects parameters in addition to fixed effect terms. Random effects parameters account for heterogeneity in the data arising from random variability (e.g., both intra- and inter-individual). As a result, mixed effects models allow stronger statistical conclusions to be drawn about correlated observations. The RE-EM tree is an extension of the regression tree to longitudinal data. An RE-EM tree is constructed through an iterative two-step process. In the first step, the random effects are estimated; in the second step, a regression tree is fit to the outcome after the estimated random effects have been subtracted, ignoring the longitudinal structure. These two steps are repeated until the random effect estimates converge. The MERF algorithm follows a similar approach, except that a random forest rather than a single decision tree is constructed in the second step, to improve prediction accuracy and address the instability that often plagues a single tree [21].
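
The two-step loop described above can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions, not the REEMtree or MERF implementation: random effects are limited to one intercept per participant, the intercepts are shrunken mean residuals using a fixed, assumed variance ratio (`ratio`), and `fit_fixed` is a hypothetical hook standing in for the tree (or, for MERF, the forest). The toy learner `mean_by_value` and the data are likewise hypothetical.

```python
def re_em(x, y, groups, fit_fixed, ratio=1.0, max_iter=50, tol=1e-8):
    """Simplified RE-EM-style estimation with one random intercept per group.

    x, y, groups: parallel lists (predictors, outcome, participant id).
    fit_fixed(x, target): hypothetical hook that fits the fixed part and
        returns a prediction function (a tree in RE-EM, a forest in MERF).
    ratio: assumed variance ratio sigma_b^2 / sigma^2, held fixed here;
        RE-EM proper re-estimates the variance components with a mixed model.
    """
    b = {g: 0.0 for g in groups}  # start with all random effects at zero
    for _ in range(max_iter):
        # Fixed-part step: fit the learner to the outcome minus current effects.
        target = [yi - b[g] for yi, g in zip(y, groups)]
        predict = fit_fixed(x, target)
        # Random-effects step: shrunken (BLUP-style) mean residual per group.
        resid = {}
        for xi, yi, g in zip(x, y, groups):
            resid.setdefault(g, []).append(yi - predict(xi))
        new_b = {}
        for g, r in resid.items():
            shrink = ratio * len(r) / (1.0 + ratio * len(r))
            new_b[g] = shrink * sum(r) / len(r)
        change = max(abs(new_b[g] - b[g]) for g in b)
        b = new_b
        if change < tol:  # stop once the random effects stop moving
            break
    return predict, b


def mean_by_value(x, target):
    """Toy fixed-part learner: mean target for each distinct predictor value."""
    sums, counts = {}, {}
    for xi, ti in zip(x, target):
        sums[xi] = sums.get(xi, 0.0) + ti
        counts[xi] = counts.get(xi, 0) + 1
    return lambda xi: sums[xi] / counts[xi]


# Hypothetical data: outcome = 10 * x plus a +2 / -2 participant offset.
x = [0, 0, 1, 1, 0, 0, 1, 1]
groups = ["A"] * 4 + ["B"] * 4
y = [10 * xi + (2 if g == "A" else -2) for xi, g in zip(x, groups)]
predict, b = re_em(x, y, groups, mean_by_value)
print(b)  # approximately {'A': 1.6, 'B': -1.6}
```

The offsets are recovered in shrunken form (2 scaled by 4/5 for four observations at ratio 1). Replacing `mean_by_value` with a regression tree gives the RE-EM idea, and replacing it with a random forest gives the MERF idea; the alternation itself is unchanged.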

Prediction model performance evaluation

Mean Absolute Error (MAE) was used to evaluate the performance of our models. The error was defined as the difference between the depression score predicted by a model and the observed depression score. MAE is the average of the absolute errors.
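
As a minimal sketch, MAE can be computed as below; the function name and the example scores are hypothetical. Because each error enters as an absolute value, MAE summarizes the size of the errors but not their direction.

```python
def mean_absolute_error(observed, predicted):
    """MAE = (1/n) * sum(|y_i - yhat_i|) over paired observations."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / len(observed)


# Hypothetical BDI scores and model predictions (not study data).
print(mean_absolute_error([4, 10, 0, 22], [6, 7, 1, 20]))  # -> 2.0
```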

We validated our models using cross-validation, which estimates how well a trained model generalizes to an independent data set [22]. The main objective of any cross-validation scheme is to evaluate a model on data that were not used to train it, so that the performance estimate is not optimistically biased. We evaluated the models using 10-fold cross-validation.
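
The source does not state whether the 10 folds were formed over observations or over participants. The sketch below assumes participant-level folds, a common choice for repeated measures because it keeps all of a person's assessments in the same fold, so a model is never tested on a participant whose other visits it was trained on. The function name `participant_folds` is hypothetical.

```python
import random


def participant_folds(participant_ids, k=10, seed=0):
    """Split observation indices into k folds, grouped by participant.

    participant_ids: one id per observation (repeated for repeated measures).
    Returns k (train_idx, test_idx) pairs; every observation appears in
    exactly one test set, and no participant is split across train and test.
    """
    unique = sorted(set(participant_ids))
    rng = random.Random(seed)  # fixed seed for reproducible folds
    rng.shuffle(unique)
    fold_of = {pid: i % k for i, pid in enumerate(unique)}
    splits = []
    for fold in range(k):
        test = [i for i, pid in enumerate(participant_ids) if fold_of[pid] == fold]
        train = [i for i, pid in enumerate(participant_ids) if fold_of[pid] != fold]
        splits.append((train, test))
    return splits


# Hypothetical ids: 25 participants with 3 observations each.
ids = [p for p in range(25) for _ in range(3)]
splits = participant_folds(ids, k=10)
print([len(test) for _, test in splits])
```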

A total of 185 participants aged between 20 and 50 (M=21.97, SD=5.97) completed a set of self-report questionnaires at baseline and at four follow-up assessments spaced three weeks apart. The outcome measure, the Beck Depression Inventory (BDI), is continuous. BDI was measured for all 187 participants at baseline, for 150 at the second visit, 137 at the third, 122 at the fourth, and 113 at the last visit. Table 2 summarizes descriptive statistics for all variables, and Table 3 reports the LMM fixed effects. Missing values for predictors were not imputed because the proportions of missing values were negligible.

| Variables | Range | Mean | Standard deviation |
|---|---|---|---|
| BDI | 0-46 | 6.9 | 7.28 |
| Age | 18-50 | 22.05 | 5.8 |
| CFI Perceived alternatives | 0-84 | 64.65 | 10.46 |
| CFI Perceived control | 15-52 | 37.47 | 7.04 |
| Problem focused coping flexibility | 0-3.0 | 0.78 | 0.42 |
| Emotion focused coping flexibility | 0.0-2.0 | 0.47 | 0.36 |
| Reappraisal | 0-42.0 | 29.39 | 7.32 |
| Suppression | 0-28.0 | 14.68 | 5.48 |
| Negative life events | 0-43.0 | 8.64 | 6.87 |
| Worry | 16-80.0 | 41.95 | 13.62 |
| Brooding | 5.0-20 | 10.22 | 3.78 |
| Pondering | 5.0-20 | 10.54 | 4.06 |
| Coping flexibility | 0-1 | 0.54 | 0.27 |
| Negative cognitive styles | 1-6.55 | 3.34 | 0.92 |

Note: BDI: Beck Depression Inventory; CFI: Cognitive Flexibility Inventory.

Table 2: Descriptive statistics for the predictor variables used in the model.

| Predictors | b | se | t | p |
|---|---|---|---|---|
| Sex | 2.08 | 0.98 | 2.13 | 0.03 |
| Age | 0.008 | 0.086 | 0.1 | 0.94 |
| CFI Perceived alternatives | -0.06 | 0.03 | -1.88 | 0.06 |
| CFI Perceived control | -0.1 | 0.05 | -1.62 | 0.1 |
| Problem focused coping flexibility | -1.49 | 0.62 | -2.4 | 0.01 |
| Emotion focused coping flexibility | -0.19 | 0.67 | -0.61 | 0.54 |
| Coping flexibility | -0.11 | 0.69 | -0.23 | 0.82 |
| Negative cognitive styles | 1.41 | 0.27 | 5.17 | <.001 |
| Reappraisal | -0.14 | 0.04 | -3.39 | 0.001 |
| Suppression | 0.12 | 0.06 | 1.37 | 0.17 |
| Negative life events | 0.42 | 0.05 | 7.75 | <.001 |
| Worry | 0.01 | 0.02 | 0.83 | 0.41 |
| Brooding | 0.26 | 0.09 | 3.17 | 0.002 |
| Pondering | 0.15 | 0.09 | 1.5 | 0.13 |
| Brooding × Negative life events | 0.08 | 0.03 | 2.69 | 0.01 |
| Pondering × Negative life events | -0.07 | 0.03 | -2.4 | 0.02 |

Note: CFI: Cognitive Flexibility Inventory; b: Unstandardized coefficient; se: Standard error; t: t value for significance; p: Significance level

Table 3: Fixed effects for LMM analysis with BDI as the outcome.

The performance of all models (LMM, RE-EM tree and MERF) was evaluated using Mean Absolute Error (MAE) and log-likelihood values. The models were validated using a 10-fold Cross Validation (CV) approach (Table 2).

The linear mixed-effects model was fitted to predict depression, with fixed effects estimated for the demographic and person-centered time-varying predictor variables and a random effect for intercepts. The analysis was conducted in R (R Development Core Team, 2009) using the lme4 package for LMM [23]. Several interaction terms were included to obtain the fullest model.
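
Person-mean centering of a time-varying predictor can be sketched as follows; the helper name `person_mean_center` and the example values are hypothetical. The within-person deviations are what enter the model as person-centered predictors. The person means are returned as well, although the source does not say whether they were also entered as between-person predictors. In lme4, the random intercept itself would be specified with a term such as `(1 | id)`, where `id` is a hypothetical participant identifier column.

```python
from collections import defaultdict


def person_mean_center(participant_ids, values):
    """Center each time-varying value on that participant's own mean.

    Returns (within, between): within = value minus the person's mean
    (the person-centered predictor); between = the person's mean itself.
    """
    totals, counts = defaultdict(float), defaultdict(int)
    for pid, v in zip(participant_ids, values):
        totals[pid] += v
        counts[pid] += 1
    means = {pid: totals[pid] / counts[pid] for pid in totals}
    within = [v - means[pid] for pid, v in zip(participant_ids, values)]
    between = [means[pid] for pid in participant_ids]
    return within, between


ids = ["p1", "p1", "p1", "p2", "p2"]
events = [2, 4, 9, 10, 6]  # hypothetical negative-life-event counts
within, between = person_mean_center(ids, events)
print(within)  # -> [-3.0, -1.0, 4.0, 2.0, -2.0]
```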

RE-EM tree analysis was conducted using the R package REEMtree, and the model was fit with autocorrelation after a log-likelihood test for autocorrelation [24]. Tree building is based on the R function rpart. The algorithm splits a node at the point that maximizes the reduction in the sum of squares for the node. Such recursive splitting continues as long as the proportion of variability accounted for by the tree (governed by the complexity parameter, cp) increases by at least 0.001 and the number of observations in the candidate splitting node is greater than 20. After the initial tree is built, it is pruned back based on 10-fold cross-validation. First, the algorithm identifies the tree whose final split corresponds to the cp value with the minimum 10-fold cross-validation error. Then, the final tree is the one whose final split corresponds to the largest cp value with a 10-fold cross-validation error less than one standard error above that minimum (the so-called one-SE rule).
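
The one-SE pruning rule can be sketched as a small helper that operates on rows shaped like rpart's `cptable` (its `CP`, `xerror` and `xstd` columns). The function name `one_se_cp` and the table values are hypothetical, and the sketch assumes the usual convention that the standard error of the minimizing row defines the threshold.

```python
def one_se_cp(cp_table):
    """Select cp by the one-SE rule from rows of (cp, xerror, xstd).

    The threshold is the minimum cross-validated error plus the standard
    error of that minimizing row; among rows at or below the threshold,
    the largest cp (i.e., the smallest tree) is returned.
    """
    best = min(cp_table, key=lambda row: row[1])
    threshold = best[1] + best[2]
    eligible = [row for row in cp_table if row[1] <= threshold]
    return max(eligible, key=lambda row: row[0])[0]


# Hypothetical cptable rows (cp, xerror, xstd), not values from this study.
table = [
    (0.250, 1.010, 0.090),
    (0.060, 0.780, 0.070),
    (0.020, 0.700, 0.065),
    (0.005, 0.690, 0.066),
    (0.001, 0.720, 0.068),
]
print(one_se_cp(table))  # -> 0.02
```

Here the minimum error is at cp 0.005, but cp 0.02 gives a smaller tree whose error is within one standard error of that minimum, so it is preferred; the selected cp would then be used to prune the fitted tree.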

Mixed Effects Random Forest (MERF) was implemented using open-source Python code, and the hyper-parameters were tuned using grid search. The parameters tuned were the number of trees, the maximum depth of the trees, and the number of iterations. All features were used when tuning MERF's parameters. In MERF, bootstrap samples are drawn at the observation level, and predictions are based on the out-of-bag sample to reduce the risk of overfitting.
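
A grid search over the three tuned parameters can be sketched as an exhaustive loop that keeps the setting with the lowest cross-validated error. The parameter names, the candidate values, and the scoring function are hypothetical: the grid actually searched is not reported, and `toy_score` is only an arbitrary stand-in for a function that would train MERF on each training split and return the mean validation MAE.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Exhaustive grid search; returns (best_params, best_score), lower is better.

    param_grid: dict mapping parameter name -> list of candidate values.
    score_fn: callable taking a params dict and returning a CV error,
        e.g. the mean MAE over the 10 folds (supplied by the caller).
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("inf")
    for combo in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, combo))
        score = score_fn(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical candidate grid.
grid = {"n_trees": [100, 300], "max_depth": [3, 5], "max_iterations": [20, 100]}


def toy_score(p):
    """Arbitrary stand-in for cross-validated MAE so the example runs alone."""
    return (abs(p["n_trees"] - 300) / 100 + abs(p["max_depth"] - 3)
            + abs(p["max_iterations"] - 100) / 100)


print(grid_search(grid, toy_score))
```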

Model performances using 10-fold schemes

We compared the mean absolute error and log-likelihood values of LMM, RE-EM trees, and MERF using 10-fold cross-validation. The log-likelihood is a measure of goodness of fit: the higher the value, the better the fit. MAE, by contrast, measures the average size of the prediction errors but does not indicate their direction (whether the model under- or overshoots the actual data). A small MAE suggests that the model predicts well, while a large MAE suggests that the model may have trouble in certain areas. The MAE reported here is the average error across all observations in the test set. Using 10-fold CV, the MERF model showed better fit (log-likelihood: -1475) than the RE-EM tree (log-likelihood: -1652) and LMM (log-likelihood: -1689). The 10-fold CV MAE values were 3.22, 3.19 and 3.13 for LMM, RE-EM trees, and MERF, respectively; these values are small given that BDI scores range from 0 to 46. The improvements in predictive accuracy for MERF and the RE-EM tree over LMM were 3% and 1%, respectively, indicating that the predictive power of MERF and RE-EM trees is comparable to that of LMM.
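
The percentage improvements quoted above follow from the reported MAE values as the relative reduction in MAE with respect to LMM; the short calculation below reproduces them (the helper name is hypothetical).

```python
# Reported 10-fold CV MAE values (from the text above).
mae = {"LMM": 3.22, "RE-EM tree": 3.19, "MERF": 3.13}


def relative_improvement(baseline, model):
    """Percentage reduction in MAE relative to the baseline model."""
    return 100 * (baseline - model) / baseline


for name in ("RE-EM tree", "MERF"):
    print(name, round(relative_improvement(mae["LMM"], mae[name]), 1))
# -> RE-EM tree 0.9
# -> MERF 2.8
```

These round to the reported 1% and 3%; in absolute terms the differences are 0.03 and 0.09 BDI points.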

Significant predictors and interaction between predictors predicting concurrent depression severity

The traditional linear mixed effects model yielded eight statistically significant predictors of depression symptoms (Table 3). The model was fitted to predict depression severity, with fixed effects estimated for sex, age and all measures related to flexibility, cognitive style, emotion regulation and exposure to negative life events. The correlated nature of the observations was accounted for by estimating random effects for subjects and time. Depression severity increased significantly with higher levels of negative life events, brooding, and negative cognitive responses, and with the interaction between brooding and negative life events. In contrast, depression severity decreased significantly with higher levels of problem-focused coping flexibility and reappraisal, and with the interaction between pondering and negative life events (Table 3).

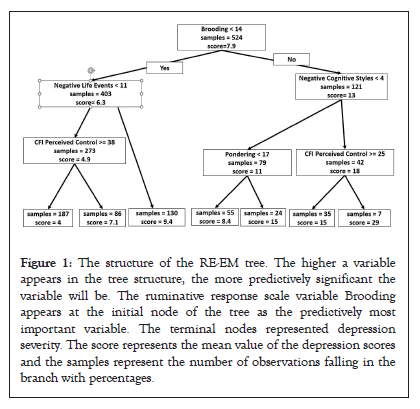

All predictor variables were entered simultaneously, and time-varying predictors were centered on each person's own mean. Only significant interactions are shown in Table 3. The RE-EM tree for depression severity, which represents the relational rules and outcomes, is presented in Figure 1. The tree was generated using all data. This output provides the additional benefit of conveying clinically relevant information by showing how a set of influential variables predicts depression severity. The terminal nodes represent depression severity. Depression symptoms were best split by brooding, with subsequent groupings based on negative life events and negative cognitive responses. In the lower brooding group (brooding<13), individuals with 14 or more negative life events had higher average depression severity (average BDI score of 9.6) than individuals with fewer than 14 negative life events (average BDI score of 5.2). Further down, at the leaf nodes, those with cognitive flexibility control scores less than 36 had less depression than those with scores greater than or equal to 36. In contrast, individuals in the higher brooding group (brooding>13) who also had negative cognitive style scores greater than or equal to 4 and cognitive flexibility control scores less than 26 had the highest depression severity (average BDI score of 25).

Figure 1: The structure of the RE-EM tree. The higher a variable appears in the tree, the more important it is for prediction. The ruminative response scale variable Brooding appears at the initial (root) node of the tree as the most important predictor. The terminal nodes represent depression severity. The score is the mean depression score, and the samples are the number (and percentage) of observations falling in each branch.

In summary, the RE-EM tree results identified two subgroups of individuals who were best divided based on their brooding score. People in the lower brooding group who were at the lowest risk for depression had fewer negative life events and higher perceived control. People in the higher brooding group had the highest severity of depression if they had more negative cognitive styles and less perceived control. These results highlight the utility of this technique for identifying which predictors are most important, for determining which subgroups are most (and least) likely to have problems with depression and for identifying which risk factors may be most important to target with interventions to reduce risk (Figure 1).
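
To show how a fitted tree translates into explicit decision rules, the upper splits described above can be written as a plain function. This is an illustrative sketch, not a validated screening tool: the function name `reem_route` is hypothetical, only the cut points and node means quoted in the text are encoded, deeper splits shown only in Figure 1 are omitted, and a brooding score of exactly 13 (which the text leaves ambiguous) is assigned to the higher group by assumption.

```python
def reem_route(brooding, life_events, cognitive_style, perceived_control):
    """Label the Figure 1 branch a participant falls into (illustrative only).

    Cut points and node means are those quoted in the text; deeper splits
    shown only in Figure 1 are not encoded.
    """
    if brooding < 13:
        # Lower brooding group: next split on negative life events.
        if life_events >= 14:
            return "lower brooding, 14+ life events (mean BDI 9.6)"
        return "lower brooding, <14 life events (mean BDI 5.2)"
    # Higher brooding group (a score of exactly 13 is placed here by assumption).
    if cognitive_style >= 4 and perceived_control < 26:
        return "higher brooding, negative style, low control (mean BDI 25)"
    return "higher brooding, other branch (see Figure 1)"


print(reem_route(brooding=16, life_events=10, cognitive_style=4.5, perceived_control=22))
# -> higher brooding, negative style, low control (mean BDI 25)
```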

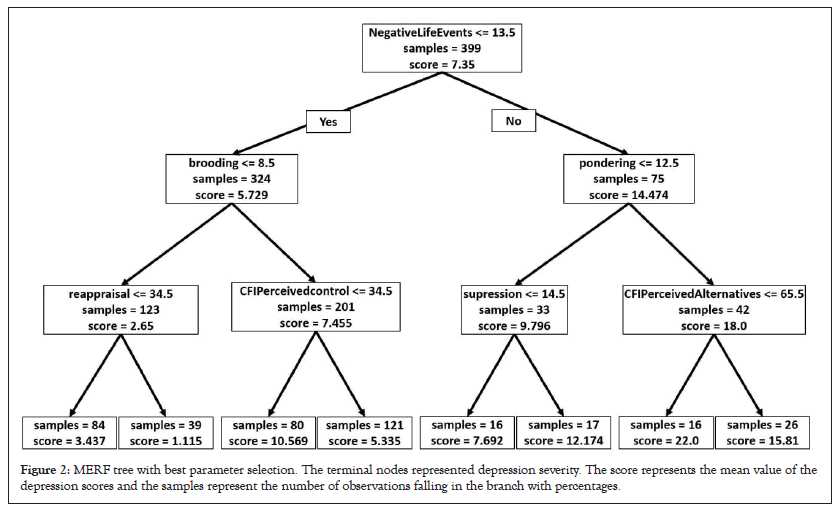

Next, a Mixed Effects Random Forest (MERF) model was fitted with all predictor variables to obtain accurate and robust predictions. Because a random forest creates many trees, it is difficult to visualize all of them. Figure 2 shows a tree from the model with the best parameter selection (number of trees=300, number of iterations=100, and maximum depth=3) within the cross-validation procedure. Depression symptoms were best split by negative life events, with subsequent groupings based on brooding and pondering. Other important variables came from the cognitive flexibility and emotion regulation questionnaires. People in the higher negative life events group had the highest severity of depression if they had more ruminative responses and less cognitive flexibility. A similar pattern was observed among people with fewer negative life events (Figure 2).

Figure 2: MERF tree with the best parameter selection. The terminal nodes represent depression severity. The score is the mean depression score, and the samples are the number (and percentage) of observations falling in each branch.

In this paper, we demonstrated two machine learning approaches, the RE-EM tree and MERF, for developing tree models in the presence of multilevel data. We compared their results with traditional LMM and found that these ML methods achieved slightly better predictive accuracy than LMM. The improvements in predictive accuracy for MERF and the RE-EM tree over LMM were 3% and 1%, respectively, in line with earlier studies comparing mixed-effects decision tree algorithms with traditional mixed-effects models [25-27]. LMM analysis found measures of negative life events, brooding, negative cognitive responses, cognitive flexibilities, problem-focused coping flexibility, and reappraisal to be associated with depression severity. The RE-EM tree identified brooding as the root node, that is, the most important initial factor for classification, and negative life events, perceived control, negative cognitive styles, and pondering as internal nodes. In MERF, negative cognitive styles ranked highly, as did worry, coping flexibility, negative life events, reappraisal, and suppression. The main advantages of the RE-EM tree and MERF over LMM are that they can handle large data sets with many covariates, they are robust to outliers and collinearity, and they rank the covariates and automatically detect potential interactions between them.

One advantage of the RE-EM tree and MERF algorithms is that they help identify and interpret relationships between the predictor and outcome variables by automatically detecting interactions. For example, using RE-EM trees to predict future depression severity showed that individuals at the highest risk for more severe depression symptoms were those with high brooding (scores>14) who also had high levels of negative cognitive style (scores>4), particularly those with less perceived control (scores<25). Therefore, for individuals with higher levels of brooding, the key variables for determining depression risk appear to be negative life events, negative cognitive style and perceived control. This finding supports the cognitive catalyst model of depression, which proposes that the effects of negative thinking on depression may be amplified by perseverative thinking processes [28-30]. In contrast, individuals with low brooding who had fewer than eleven life events, particularly those with higher perceived control, had the lowest risk for future symptoms of depression. This finding aligns with traditional vulnerability-stress models of rumination as a diathesis for depression [31]. This example highlights one of the key ways in which tree-based machine learning methods are useful: they can identify subgroups of individuals (e.g., those with higher or lower levels of brooding) for whom the key indicators of risk differ.

This kind of relationship is hard to obtain with traditional LMM without specifying the interaction terms a priori. Thus, RE-EM trees and MERF have an advantage, particularly in data-driven approaches in which interactions are not specified in advance. Complex interactions in MERF are not easily accessible because it operates as a black box: the individual trees are not visible. However, once its parameters have been tuned, MERF provides the researcher with an index of variable importance, so that the relative magnitude of each predictor's relationship with the outcome can be compared. Thus, if the researcher would like to understand the specific mechanisms that differentiate individuals on the response variable with respect to the predictor variables, the RE-EM tree may be preferable.
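
The source does not specify how MERF's variable-importance index was computed. As one model-agnostic possibility, permutation importance measures how much the prediction error grows when a single predictor's values are shuffled across observations. The sketch below uses MAE as the error and a hypothetical model and data set; it is not necessarily the index reported here, and it shuffles across all observations without regard to the repeated-measures structure.

```python
import random


def mae(y, yhat):
    """Mean absolute error between observed and predicted values."""
    return sum(abs(a - b) for a, b in zip(y, yhat)) / len(y)


def permutation_importance(predict, rows, y, feature, n_repeats=20, seed=0):
    """Average increase in MAE when one feature's values are shuffled.

    predict maps a row (dict of predictor values) to a prediction, so any
    fitted model can be wrapped this way. Values near 0 mean the model
    barely relies on the feature.
    """
    rng = random.Random(seed)
    base = mae(y, [predict(r) for r in rows])
    increases = []
    for _ in range(n_repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        permuted = [{**r, feature: v} for r, v in zip(rows, column)]
        increases.append(mae(y, [predict(r) for r in permuted]) - base)
    return sum(increases) / n_repeats


# Hypothetical model and data: brooding drives the outcome, worry is ignored.
def model(row):
    return 0.5 * row["brooding"]


rows = [{"brooding": b, "worry": w} for b in range(5, 21) for w in (30, 50)]
y = [model(r) for r in rows]
print(permutation_importance(model, rows, y, "brooding") > 0)  # -> True
print(permutation_importance(model, rows, y, "worry"))         # -> 0.0
```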

Furthermore, the RE-EM tree directly shows how the relevant patient characteristics should be combined to decide whether a patient is at risk for more severe depression. It provides an interpretable summary figure that may assist in monitoring a health behavior intervention. In clinical practice, fitted RE-EM trees could be used to support decision-making and to inform policy [32-35].

Predicting future outcomes from historical observations is a long-standing challenge in many scientific areas. We found that the accuracy of predictions depends on the length of the historical observations (the size of the rolling window): as the number of repeated observations used for training increases, the RE-EM tree and MERF models, which account for random effects, perform better. The rules from the RE-EM tree showed that brooding, negative life events and negative cognitive styles were the main predictors of depression at future time points in our sample. However, although we evaluated several potential risk and protective factors for depression, our list of factors was not exhaustive, and other factors could be considered in future research. For example, a recent meta-analysis showed that dampening responses to positive affect may predict increases in depression over time.
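
A rolling-window evaluation of the kind described above can be sketched as a generator of (training visits, test visit) pairs. The scheme and the function name `rolling_window_splits` are assumptions for illustration: the source does not detail its windowing, and the five visits here correspond to the baseline and four follow-up assessments.

```python
def rolling_window_splits(n_visits, window):
    """Yield (train_visits, test_visit) pairs for forecasting later visits.

    Visits are numbered 1..n_visits. Each split trains on up to `window`
    immediately preceding visits and tests on the next visit.
    """
    for test_visit in range(2, n_visits + 1):
        start = max(1, test_visit - window)
        yield list(range(start, test_visit)), test_visit


for train, test in rolling_window_splits(n_visits=5, window=2):
    print(train, "->", test)
# -> [1] -> 2
# -> [1, 2] -> 3
# -> [2, 3] -> 4
# -> [3, 4] -> 5
```

Setting `window` to the number of visits turns this into an expanding window, which is one way to compare accuracy as more repeated observations are used for training.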

Tree-based methods have several limitations. For instance, the cut-points for nodes are chosen automatically by the algorithm rather than by researchers. Unlike in regression modeling, the statistical significance of interactions cannot be tested. If a researcher wants to test an a priori hypothesis or evaluate the statistical significance of interactions, regression modeling is preferable to tree-based methods. There were also limitations to the example data used to demonstrate this application of machine learning. Data were collected among students; although college-age students are at heightened risk for depression and anxiety, further investigation in clinical samples (e.g., predicting the future onset of depressive episodes) is needed to establish the generalizability of our findings. In addition, measures were self-reported and thus may be valid only to the extent that individuals are willing and able to report accurately. Nevertheless, the methods described here can also be used to integrate self-report with other modes of data collection in psychiatric research, such as clinical interviews, psychophysiology and other biological measures.

Early warning of future depression, the primary clinical problem addressed in this study, is important for targeting high-risk patients for monitoring and intervention. Data-driven machine learning methods can help predict future changes in depression. Tree-based models such as the RE-EM tree are interpretable, transparent and easy to deploy in clinical practice. Lagged training and validation structures can be used to further investigate the temporal effects of risk factors over successive patient visits. For example, regularly scheduled computerized assessments could be fed into ML models to identify sessions at which patients are at higher-than-average risk of experiencing an increase in symptoms over the subsequent weeks. This information could then be used to direct resources toward individuals at these moments (e.g., additional interventions such as medication, skills training, and booster sessions) to prevent depression relapse. In conclusion, this manuscript demonstrated that random effects machine learning models can take advantage of increasing sample sizes and dependencies between observations to generate more robust and accurate models.
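
A lagged training structure pairs the predictors observed at one assessment with the depression score observed at a later one. The sketch below (hypothetical function name `lagged_pairs` and hypothetical records) builds one-step-ahead pairs within each participant and skips pairs whose later visit was missed, rather than imputing them.

```python
from collections import defaultdict


def lagged_pairs(records, lag=1):
    """Pair predictors at visit t with the outcome at visit t + lag.

    records: iterable of (participant_id, visit, predictors_dict, outcome).
    Pairs are formed only within a participant and only when visit t + lag
    was actually observed, so missed follow-ups are skipped, not imputed.
    """
    by_person = defaultdict(dict)
    for pid, visit, predictors, outcome in records:
        by_person[pid][visit] = (predictors, outcome)
    pairs = []
    for pid, visits in by_person.items():
        for t, (predictors, _) in sorted(visits.items()):
            if t + lag in visits:
                pairs.append((pid, t, predictors, visits[t + lag][1]))
    return pairs


# Hypothetical records: p2 missed visit 3.
records = [
    ("p1", 1, {"brooding": 12}, 8), ("p1", 2, {"brooding": 14}, 11),
    ("p1", 3, {"brooding": 15}, 13),
    ("p2", 1, {"brooding": 9}, 4), ("p2", 2, {"brooding": 8}, 3),
    ("p2", 4, {"brooding": 7}, 2),
]
for pid, t, x, future_bdi in lagged_pairs(records):
    print(pid, t, x, "->", future_bdi)
```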

Data collection was supported by grants to Jonathan P. Stange from the National Institute of Mental Health (F31MH099761), the Association for Psychological Science, the American Psychological Foundation, and the American Psychological Association. Jonathan P. Stange was supported by grant 1K23MH112769-01A1 from NIMH.

Citation: Bhaumik R, Stange J (2023) Utilizing Random Effects Machine Learning Algorithms for Identifying Vulnerability to Depression. J Dep Anxiety. 12:516.

Received: 03-Jul-2023, Manuscript No. JDA-23-25406; Editor assigned: 05-Jul-2023, Pre QC No. JDA-23-25406 (PQ); Reviewed: 19-Jul-2023, QC No. JDA-23-25406; Revised: 26-Jul-2023, Manuscript No. JDA-23-25406 (R); Published: 04-Aug-2023, DOI: 10.35248/2167-1044.23.12.516

Copyright: © 2023 Bhaumik R, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.