Journal of Geology & Geophysics

Open Access

ISSN: 2381-8719


Research - (2024) Volume 13, Issue 1

Unmanned Aerial Vehicles (UAVs) have emerged as a solution for day-to-day survey tasks, allowing users to visualize phenomena in real time. This paper explores the capability of UAVs, or drones, to collect accurate, geotagged data quickly, including the photogrammetry software processes used to deliver standardized data outputs. To explore these capabilities, Gatu Township in Centenary, Muzarabani District, Zimbabwe, was chosen from the national topographic map series. The study demonstrates the efficiency of data collection using drones: generating 2D orthomosaics in real time so that analysts can easily visualize land cover and identify any changes, and mapping and modelling large areas to produce data for 2D and 3D models. Recent developments in innovative optical image processing have further lowered the costs of high-resolution topographic surveys.

Photogrammetry; Unmanned Aerial Vehicles (UAV); 3D point cloud; Geographic Information Systems (GIS)

This article provides an overview of drone survey, or photogrammetry, Unmanned Aerial Vehicle (UAV)/drone preparation, and the end products of image processing, which include the orthomosaic, 3D point cloud and contour map. A UAV/drone is an aircraft flown remotely from the ground following a preplanned flight plan, whilst a 3D point cloud is 3D geometric information, mainly acquired through laser scanning and photographic survey technologies, used to generate Digital Surface Models (DSM), Digital Elevation Models (DEM) and Digital Terrain Models (DTM) [1].

Various benefits of drone photogrammetry have been noted by scholars in recent decades. These include managing land cover and land use change, and allowing users to visualize results immediately using real-time orthomosaics, or high-resolution maps during post-processing [2]. Affordable drones and image processing software that automate data collection and processing have been noted as a viable solution, reducing the costs associated with fieldwork, including work in inaccessible remote areas or locations with obstructions [3].

Photogrammetry produces maps directly from photographic images by identifying, symbolizing and capturing the elevation of man-made and natural features visible on the imagery [4], providing GIS with essential information. As a result, drones and related image processing software help to keep records that can easily be used for regular land cover comparisons, so that users can monitor changes dynamically.

Overview on drone application

Planning a survey generally starts with collecting available geographic information about the area of interest. However, finding and incorporating this data from multiple sources is time consuming, even when it is available online. In that regard, the use of drones has gained momentum, aided by reduced costs and the increased reliability of the technology. This section focuses on the potential uses of drones, their benefits and limitations.

Drone application: Drone surveys can be used for various purposes, including dam and bridge inspections [5], land surveying [1], construction monitoring [6], slope monitoring [7,8] and urban traffic monitoring [9]. A desktop application for GIS drone mapping, such as ArcGIS Drone2Map, has been used with drone data captured using Site Scan Flight or any third-party drone data collection app [3]. After the flight, the user creates a project and loads the drone imagery. Ground control points are then added, and 2D and 3D outputs are processed and created.

With the 2D or 3D outputs, measurements can be taken, changes tracked and other analyses performed. Advanced analysis can also be done in ArcGIS Pro, using a range of tools for advanced spatial, temporal and spectral analysis, as well as data management [3].

Benefits of drone survey

Improved quality and accuracy: Drones are essential because of their ability to capture reality, that is, images with coordinates and elevation, ensuring quality through the capture of many images and the use of sensors such as LiDAR, infrared cameras and multispectral sensors [1]. Software such as Pix4D allows users to add Ground Control Points (GCPs) to help improve data accuracy.

Cost effective: The use of UAVs or drones saves costs by reducing the need for several surveyors and the costs related to ground surveying; the software used for drone surveying also contributes to these savings.

Saving time: Drones make it easier to convey data in real time, while software such as DroneDeploy and Pix4D makes it easier to automate data processing and export, allowing users to save time and costs [3].

Improved work safety and coverage: Because drones are controlled remotely, they are safer to use and can reach inaccessible areas, including areas dangerous to human life.

Drone analytics: Drones allow users to view natural color, thermal infrared or multispectral datasets, and to perform 2D and 3D measurements such as terrain and spectral profiles, as well as volumetric calculations, which allow quick data analysis [4].

Integration with other GIS software: Image processing software is part of a GIS, which provides access to tools for geospatial analysis. As such, end products from drone software can be exported and opened easily in ArcGIS Desktop or ArcGIS Pro for integrated management. ArcGIS Online or ArcGIS Enterprise can also be used to publish or share the imagery products and their elevation datasets [5].
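The volumetric calculations mentioned under drone analytics can be illustrated with a minimal sketch. It assumes the DSM is available as a regular grid of elevations in metres and that volumes are measured against a flat base plane; the function name, the flat-plane assumption and the toy grid are illustrative and do not reproduce the method of any particular package.

```python
def cut_fill_volumes(dsm, cell_size_m, base_elev_m):
    """Approximate cut and fill volumes (m^3) relative to a flat base plane.

    dsm: 2D list of elevations (m), one value per square grid cell.
    cell_size_m: ground size of one cell side (m).
    base_elev_m: elevation of the reference plane (m).
    Cut = material above the plane, fill = space below it.
    """
    cell_area = cell_size_m ** 2
    cut = fill = 0.0
    for row in dsm:
        for z in row:
            dz = z - base_elev_m
            if dz > 0:
                cut += dz * cell_area
            else:
                fill += -dz * cell_area
    return cut, fill


# Hypothetical 2 x 2 DSM with 0.5 m cells and a base plane at 100 m.
print(cut_fill_volumes([[101.0, 102.0], [100.0, 99.0]], 0.5, 100.0))
```

Summing height differences times cell area is the simplest prism approximation; real tools may also fit sloping or interpolated base surfaces.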

Study area

The study was conducted at micro-scale using a drone, or Unmanned Aerial Vehicle (UAV). Images were taken over Gatu Township, located in Centenary, Muzarabani District, at latitude 16°43'22.40" S and longitude 31°06'52.63" E.
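The study-area coordinates are given in degrees, minutes and seconds. The sketch below converts them to signed decimal degrees and derives the standard 6° UTM zone, which can serve as a cross-check on the "Auto-detected WGS 84 / UTM Zone 36S" output coordinate system used in Pix4D. It assumes the regular UTM zoning (no Norway/Svalbard exceptions) and the EPSG convention of 326xx for northern and 327xx for southern WGS 84 / UTM zones; the function names are illustrative.

```python
import math


def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees/minutes/seconds to signed decimal degrees.
    South and West are negative."""
    value = degrees + minutes / 60.0 + seconds / 3600.0
    return -value if hemisphere in ("S", "W") else value


def utm_zone(lat, lon):
    """Standard 6-degree UTM zone number and hemisphere letter.
    Ignores the Norway/Svalbard exceptions, which do not apply in Zimbabwe."""
    zone = int(math.floor((lon + 180.0) / 6.0)) + 1
    hemisphere = "N" if lat >= 0 else "S"
    return zone, hemisphere


def wgs84_utm_epsg(zone, hemisphere):
    """EPSG code for WGS 84 / UTM: 326xx (north) or 327xx (south)."""
    return (32600 if hemisphere == "N" else 32700) + zone


lat = dms_to_decimal(16, 43, 22.40, "S")
lon = dms_to_decimal(31, 6, 52.63, "E")
zone, hemi = utm_zone(lat, lon)
print(f"{lat:.6f}, {lon:.6f} -> UTM zone {zone}{hemi}, EPSG:{wgs84_utm_epsg(zone, hemi)}")
# prints: -16.722889, 31.114619 -> UTM zone 36S, EPSG:32736
```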

Materials

A DJI Phantom 4 drone, Google Earth Pro, Pix4Dmapper version 4.7.5 and ArcGIS 10.8 were used in the study. ArcGIS is geographic information system software that allows users to further manipulate and present data from Pix4D.

Methodology

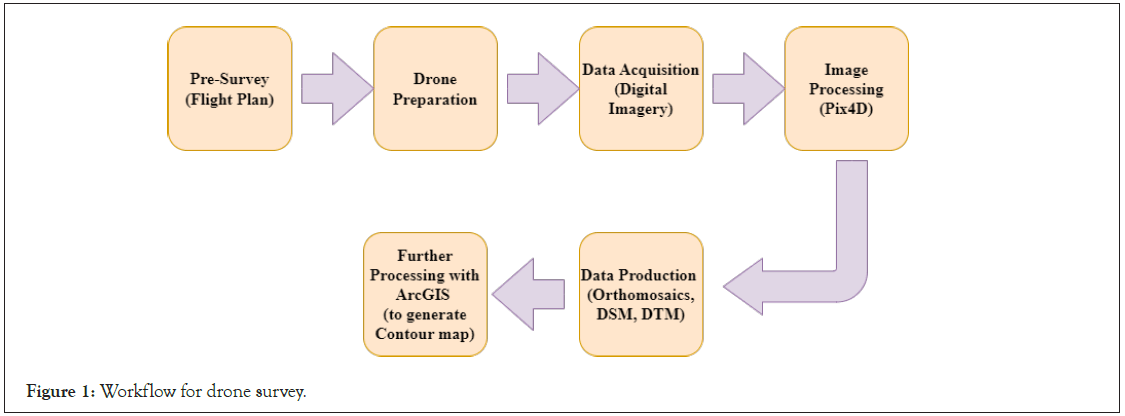

The study consisted of five stages (Figure 1): pre-survey, drone preparation, data acquisition, image processing and further processing with ArcGIS.

Figure 1: Workflow for drone survey.

Pre-survey using Google Earth Pro: Many people use Google Earth to search for a famous landmark or their own address; however, many surveyors have also used the online database as a valuable tool for visualizing the layout and terrain of an area or region of interest [4]. By combining satellite, aerial, 3D and Street View imagery, Google Earth provides remote access to a wealth of information.

To generate the study-area boundary, the Muzarabani District topographic map from the Surveyor General Department was used. The topographic map was digitized and georeferenced in QGIS, and Gatu Township was selected as the study area. A KML file of the township boundary was also created in Google Earth Pro (Figure 2).

Figure 2: Location of the study area in ArcMap 10.8 and the generated polygon of the study area in Google Earth Pro.
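The township boundary was stored as a KML file. As a minimal sketch of what such a file contains, the function below builds a KML 2.2 document with one closed polygon using only the Python standard library. The function name and the rectangle used in the example are hypothetical; the actual Gatu Township boundary vertices are not given in the text.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def boundary_kml(name, lonlat_ring):
    """Build a minimal KML document holding one polygon.

    lonlat_ring: list of (lon, lat) tuples in decimal degrees (WGS 84).
    KML expects "lon,lat[,alt]" order, and the outer ring must be closed,
    so the first vertex is repeated at the end if needed.
    """
    ring = list(lonlat_ring)
    if ring[0] != ring[-1]:
        ring.append(ring[0])
    ET.register_namespace("", KML_NS)
    kml = ET.Element(f"{{{KML_NS}}}kml")
    doc = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    placemark = ET.SubElement(doc, f"{{{KML_NS}}}Placemark")
    ET.SubElement(placemark, f"{{{KML_NS}}}name").text = name
    polygon = ET.SubElement(placemark, f"{{{KML_NS}}}Polygon")
    outer = ET.SubElement(polygon, f"{{{KML_NS}}}outerBoundaryIs")
    linear_ring = ET.SubElement(outer, f"{{{KML_NS}}}LinearRing")
    ET.SubElement(linear_ring, f"{{{KML_NS}}}coordinates").text = " ".join(
        f"{lon:.6f},{lat:.6f},0" for lon, lat in ring
    )
    return ET.tostring(kml, encoding="unicode")


# Hypothetical rectangle near the study-area coordinates (not the real boundary).
kml_text = boundary_kml(
    "Study area (hypothetical)",
    [(31.112, -16.725), (31.118, -16.725), (31.118, -16.720), (31.112, -16.720)],
)
print(kml_text)
```

Saved with a `.kml` extension, a file of this shape can typically be opened in Google Earth Pro or imported into flight-planning software that accepts KML.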

The ability to survey a site virtually is a major advantage of Google Earth Pro, which has become an integral part of GIS and surveying. Surveyors can view the area of interest in satellite images and aerial photographs and enhance the view with GIS information, thereby preparing a cost estimate and survey plan based on the data available. Google Earth's capabilities include zooming through multi-resolution imagery, measuring linear distance and area, overlaying section lines and boundaries, and geotagging photos [10]. Google Earth allows surveyors to identify access points, survey marks, obstacles and vegetation cover that influence cost estimates, helping to schedule the necessary people and equipment.
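Linear distances of the kind measured with Google Earth's ruler can also be computed in a planning script. The sketch below uses the haversine formula on a spherical Earth with the mean radius of 6,371,008.8 m. This is a common approximation (errors of up to roughly 0.5% against the WGS 84 ellipsoid) and is not claimed to be how Google Earth measures distance internally.

```python
import math

EARTH_RADIUS_M = 6371008.8  # mean Earth radius; spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in
    decimal degrees, treating the Earth as a sphere."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# Hypothetical east-west edge near the study area (about 640 m long).
print(round(haversine_m(-16.725, 31.112, -16.725, 31.118)))
```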

Drone preparation and image capture: The weather was checked before flying the drone to ensure there was no rain, fog, snowfall or strong wind on the day, and ground control points were set up and distributed throughout the study area. Overlap and flight mode were also set, with a sweeping back-and-forth flight path over the entire site. The survey flight plan was created by importing a KML file of the study area. After the take-off button was pressed, the drone took off autonomously and captured 118 images.
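The overlap settings and the sweeping flight path are linked through the image footprint. The sketch below shows one common way to relate them: the ground footprint of a nadir image is the GSD times the image size in pixels, and the spacing between flight lines (or exposures) is the footprint times one minus the side (or front) overlap. The overlap percentages used in the study are not reported, so 70% side and 75% front overlap are assumptions; the average GSD (3.13 cm) and the 5472 × 3648 image size come from Tables 1 and 7. All function names are hypothetical.

```python
def footprint_m(gsd_m, width_px, height_px):
    """Ground footprint (across-track, along-track) of one nadir image,
    assuming the long image side is oriented across track."""
    return gsd_m * width_px, gsd_m * height_px


def spacing_m(footprint_across, footprint_along, side_overlap, front_overlap):
    """Distance between flight lines and between exposures for given overlaps."""
    line_spacing = footprint_across * (1.0 - side_overlap)
    photo_spacing = footprint_along * (1.0 - front_overlap)
    return line_spacing, photo_spacing


def lawnmower_waypoints(width_m, height_m, line_spacing):
    """Back-and-forth ("sweeping") flight lines over a rectangle in local
    metres, origin at the south-west corner. Lines run south-north and
    alternate direction on each pass."""
    waypoints = []
    x = 0.0
    northbound = True
    while x <= width_m:
        ys = (0.0, height_m) if northbound else (height_m, 0.0)
        waypoints.extend([(x, ys[0]), (x, ys[1])])
        northbound = not northbound
        x += line_spacing
    return waypoints


across, along = footprint_m(0.0313, 5472, 3648)
line_sp, photo_sp = spacing_m(across, along, side_overlap=0.70, front_overlap=0.75)
print(f"line spacing ~{line_sp:.1f} m, exposure every ~{photo_sp:.1f} m")
# prints: line spacing ~51.4 m, exposure every ~28.5 m
```

Commercial flight-planning apps also add turn margins and extend lines beyond the boundary; this sketch only shows the geometry.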

Image processing with Pix4Dmapper: After the drone flight, the 118 images captured by the drone were uploaded to the post-processing software (Pix4Dmapper), which generates 2D and 3D maps, including orthomosaics. The collected images were checked for radiometric distortions.

Image properties: In the image properties, Geolocation Accuracy was set to “Standard” and the coordinate system to “WGS 1984”. The Output Coordinate System was set to “Auto-detected WGS 84 / UTM Zone 36S” and the processing template to “Standard-3D Maps”. The processing options and processing results are summarized in Table 1.

| Parameter | Value |
|---|---|
| Image scale | Multiscale, 1/4 (quarter image size, fast) |
| Point density | Low (fast) |
| Minimum number of matches | 3 |
| 3D textured mesh generation | Yes |
| 3D textured mesh settings | Resolution: Medium Resolution (default) |
| Advanced: 3D textured mesh settings | Sample Density Divider: 1 |
| Advanced: Image groups | group1 |
| Advanced: Use processing area | Yes |
| Advanced: Use annotations | Yes |
| Time for initial processing | 4 min 35 s |
| Time for point cloud densification | 4 min 52 s |
| Time for point cloud classification | 1 min 50 s |
| Time for 3D textured mesh generation | 3 min 46 s |
| Average Ground Sampling Distance (GSD) | 3.13 cm (1.23 in) |
| Area covered | 0.397 km² (39.6549 ha; 98.0401 acres) |

Table 1: Point cloud densification processing options in Pix4D.
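The average GSD in Table 1 depends on the camera geometry and the flying height. For a nadir frame camera, GSD = sensor width × flying height / (focal length × image width in pixels). The flying height is not reported in the study, so the sketch below inverts this relation to estimate it from the sensor width (13.133 mm) and image width (5472 px) given with Table 7, assuming a 10.6 mm focal length as suggested by the camera model name. The result is an implied estimate only; terrain relief and the refined focal length computed by Pix4D would change it.

```python
def gsd_m(sensor_width_mm, focal_length_mm, image_width_px, flying_height_m):
    """Ground sampling distance (m/pixel) for a nadir frame camera:
    GSD = sensor_width * H / (focal_length * image_width)."""
    return sensor_width_mm * flying_height_m / (focal_length_mm * image_width_px)


def flying_height_m(gsd, sensor_width_mm, focal_length_mm, image_width_px):
    """Invert the GSD relation to get the mean height above ground (m)."""
    return gsd * focal_length_mm * image_width_px / sensor_width_mm


# Average GSD from Table 1; sensor and image size from Table 7; focal length assumed.
h = flying_height_m(0.0313, 13.133, 10.6, 5472)
print(f"implied mean height above ground ~{h:.0f} m")
# prints: implied mean height above ground ~138 m
```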

Computed image/GCP/manual tie point positions: In Figure 3, the green line traces the positions of the images in capture order, starting from the large blue dot (Pix4D).

Figure 3: The top view of the initial image position in Pix4D.

Absolute camera position and orientation uncertainties

The absolute camera position and orientation uncertainties are shown in Table 2, and the orthomosaic generated in Pix4D is shown in Figure 4.

| | X (m) | Y (m) | Z (m) | Omega (°) | Phi (°) | Kappa (°) |
|---|---|---|---|---|---|---|
| Mean | 0.120 | 0.114 | 0.199 | 0.031 | 0.035 | 0.018 |
| Sigma | 0.030 | 0.025 | 0.040 | 0.003 | 0.009 | 0.003 |

Table 2: Absolute camera position and orientation uncertainties.

Figure 4: Orthomosaic in Pix4D.

Figure 5 shows the number of overlapping images computed for each pixel of the orthomosaic. Red and yellow areas indicate low overlap, for which poor results may be generated. Green areas indicate an overlap of over 5 images for every pixel; good-quality results are generated there as long as the number of key point matches is also sufficient.
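The overlap map can be understood as a per-cell count of how many image footprints cover each location. The sketch below counts overlaps on a grid using hypothetical axis-aligned rectangular footprints and applies the "over 5 images" threshold from the text. It is a simplification under stated assumptions (nadir images, flat terrain, rectangular footprints); Pix4D derives overlap from the actual reprojection of each image, and the split between red and yellow is not reproduced here.

```python
def overlap_counts(footprints, grid_w, grid_h, cell_m):
    """Count, for every cell of a grid, how many image footprints cover it.

    footprints: axis-aligned rectangles (xmin, ymin, xmax, ymax) in metres.
    A cell counts as covered when its centre falls inside a footprint.
    """
    counts = [[0] * grid_w for _ in range(grid_h)]
    for row in range(grid_h):
        cy = (row + 0.5) * cell_m
        for col in range(grid_w):
            cx = (col + 0.5) * cell_m
            for xmin, ymin, xmax, ymax in footprints:
                if xmin <= cx <= xmax and ymin <= cy <= ymax:
                    counts[row][col] += 1
    return counts


def classify(count, good_threshold=5):
    """Green when more than `good_threshold` images overlap, else low overlap."""
    return "green" if count > good_threshold else "low (red/yellow)"


# Hypothetical footprints: three wide images and three covering only the west half.
fps = [(0, 0, 10, 10)] * 3 + [(0, 0, 5, 10)] * 3
grid = overlap_counts(fps, grid_w=2, grid_h=1, cell_m=5)
print(grid, [classify(c) for c in grid[0]])
```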

Figure 5: Overlapping images in Pix4D. Note: Overlap of over 5 images for every pixel.


Orthomosaic and corresponding Digital Surface Model (DSM)

The 3D textured mesh is a reproduction of the edges, faces, vertices and texture of the area captured by the UAV. The model is useful for visual inspections and external stakeholder involvement (Figures 6 and 7). The number of generated tiles, the number of 3D densified points and the average density are summarized in Table 3.

| Number of Generated Tiles | Number of 3D Densified Points | Average Density (points per m³) |
|---|---|---|
| 1 | 1200445 | 9.06 |

Table 3: Result of the generated outputs.

Figure 6: Orthomosaic map of study area.

Figure 7: The corresponding sparse Digital Surface Model (DSM) before densification.

Absolute geolocation variance

In Table 4, Min Error and Max Error represent geolocation error intervals between -1.5 and 1.5 times the maximum accuracy of all the images. Columns X, Y and Z show the percentage of images with geolocation errors within each predefined interval. The geolocation error is the difference between the initial and computed image positions. Note that the image geolocation errors do not correspond to the accuracy of the observed 3D points.

| Min Error (m) | Max Error (m) | Geolocation Error X (%) | Geolocation Error Y (%) | Geolocation Error Z (%) |
|---|---|---|---|---|
| - | -15.00 | 0.00 | 0.00 | 0.00 |
| -15.00 | -12.00 | 0.00 | 0.00 | 0.00 |
| -12.00 | -9.00 | 0.00 | 0.00 | 0.00 |
| -9.00 | -6.00 | 0.00 | 0.00 | 0.00 |
| -6.00 | -3.00 | 0.00 | 0.00 | 0.00 |
| -3.00 | 0.00 | 49.15 | 45.76 | 55.08 |
| 0.00 | 3.00 | 50.85 | 54.24 | 44.92 |
| 3.00 | 6.00 | 0.00 | 0.00 | 0.00 |
| 6.00 | 9.00 | 0.00 | 0.00 | 0.00 |
| 9.00 | 12.00 | 0.00 | 0.00 | 0.00 |
| 12.00 | 15.00 | 0.00 | 0.00 | 0.00 |
| 15.00 | - | 0.00 | 0.00 | 0.00 |
| Mean (m) | | -0.000000 | -0.000000 | 0.000000 |
| Sigma (m) | | 0.145862 | 0.142033 | 0.644717 |
| RMS Error (m) | | 0.145862 | 0.142033 | 0.644717 |

Table 4: Absolute geolocation variance.
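The summary rows of Table 4 follow from the per-image errors. The sketch below computes the mean, sigma and RMS of a list of geolocation errors and bins them into intervals. It uses the population standard deviation, which is consistent with Table 4 reporting Sigma equal to RMS when the mean is zero, since RMS² = mean² + sigma². Treating intervals as half-open is an assumption, and the error values in the example are hypothetical.

```python
import math


def error_stats(errors):
    """Mean, sigma (population standard deviation) and RMS of errors (m).
    RMS^2 = mean^2 + sigma^2, so RMS equals sigma when the mean is zero."""
    n = len(errors)
    mean = sum(errors) / n
    sigma = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    rms = math.sqrt(sum(e * e for e in errors) / n)
    return mean, sigma, rms


def interval_percentages(errors, edges):
    """Percentage of errors in each half-open interval [edges[i], edges[i+1])."""
    n = len(errors)
    out = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        k = sum(1 for e in errors if lo <= e < hi)
        out.append(100.0 * k / n)
    return out


# Hypothetical X errors (m) for four images.
errs = [-0.2, 0.2, -0.1, 0.1]
print(error_stats(errs), interval_percentages(errs, [-3.0, 0.0, 3.0]))
```

As a consistency check on Table 4, with 118 images the 49.15% in the (-3, 0) X interval corresponds to 58 images (58/118 ≈ 0.4915).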

Relative geolocation variance

Images X, Y and Z in Table 5 represent the percentage of images with a relative geolocation error in X, Y and Z within each interval.

| Relative Geolocation Error | Images X (%) | Images Y (%) | Images Z (%) |
|---|---|---|---|
| (-1.00, 1.00) | 100.00 | 100.00 | 100.00 |
| (-2.00, 2.00) | 100.00 | 100.00 | 100.00 |
| (-3.00, 3.00) | 100.00 | 100.00 | 100.00 |
| Mean of Geolocation Accuracy (m) | 5.000000 | 5.000000 | 10.000000 |
| Sigma of Geolocation Accuracy (m) | 0.000000 | 0.000000 | 0.000000 |

Table 5: Relative geolocation variance.
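A relative geolocation error can be read as the absolute error scaled by the image's a priori geolocation accuracy. Assuming that definition, the sketch below computes the percentage of images within ±1, ±2 and ±3. With the accuracies in Table 5 (5 m horizontally, 10 m vertically) and all absolute errors in Table 4 lying between -3 m and 3 m, every relative error falls inside ±1, which matches the 100% values reported. The function name and sample errors are hypothetical.

```python
def relative_percentages(errors_m, accuracy_m, bounds=(1.0, 2.0, 3.0)):
    """Percentage of images whose relative error (error / accuracy)
    lies within each symmetric bound, e.g. (-1, 1), (-2, 2), (-3, 3)."""
    n = len(errors_m)
    rel = [e / accuracy_m for e in errors_m]
    return {b: 100.0 * sum(1 for r in rel if -b <= r <= b) / n for b in bounds}


# Hypothetical X errors within +/-3 m and the 5 m accuracy from Table 5.
print(relative_percentages([-2.9, 0.5, 2.9], 5.0))
```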

Geolocation orientational variance

In Table 6, the geolocation RMS error of the orientation angles is computed from the differences between the initial and computed image orientation angles.

| Geolocation Orientational Variance | RMS (°) |
|---|---|
| Omega | 3.195 |
| Phi | 2.414 |
| Kappa | 4.684 |

Table 6: Geolocation orientational variance.
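When orientation angles are compared, naive subtraction can inflate the RMS for angles near the 0°/360° boundary, which matters mostly for Kappa (heading). The sketch below wraps each initial-minus-computed difference into [-180°, 180°) before taking the RMS. Whether Pix4D applies exactly this wrapping is not stated in the text; this is an illustrative assumption, and the sample angles are hypothetical.

```python
import math


def wrap_deg(angle):
    """Wrap an angle difference into [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def orientation_rms(initial_deg, computed_deg):
    """RMS (degrees) of wrapped differences between initial and computed
    angles, so that 359 vs 1 counts as 2 degrees rather than 358."""
    diffs = [wrap_deg(a - b) for a, b in zip(initial_deg, computed_deg)]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical Kappa values for two images.
print(orientation_rms([359.0, 10.0], [1.0, 7.0]))
```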

Bundle block adjustment

The bundle block adjustment jointly refines the internal and external camera parameters and the positions of the 3D tie points; its statistics are summarized in Table 7.

| Parameter | Value |
|---|---|
| Number of 2D key point observations for bundle block adjustment | 251883 |
| Number of 3D points for bundle block adjustment | 99122 |
| Mean reprojection error (pixels) | 0.092 |

Table 7: Bundle block adjustment: Internal camera parameters, S.O.D.A._10.6_5472x3648 (RGB); Sensor dimensions, 13.133 (mm) × 8.755 (mm).
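The mean reprojection error in Table 7 is the average distance, in pixels, between each observed key point and the projection of its adjusted 3D point into the image; bundle adjustment seeks the parameters that minimize these residuals. The sketch below only evaluates the error for given parameters with a distortion-free pinhole camera. It assumes the points are already expressed in camera coordinates, a focal length of 10.6 mm converted to pixels using the 13.133 mm sensor width and 5472 px image width, and a principal point at the image centre. It does not perform the optimization itself.

```python
import math


def project(point_cam, f_px, cx, cy):
    """Pinhole projection of a 3D point in camera coordinates
    (x right, y down, z forward, metres) to pixel coordinates."""
    x, y, z = point_cam
    return f_px * x / z + cx, f_px * y / z + cy


def mean_reprojection_error(points_cam, observations_px, f_px, cx, cy):
    """Mean Euclidean distance (pixels) between observed key points and
    the projections of their 3D points."""
    total = 0.0
    for p, (u_obs, v_obs) in zip(points_cam, observations_px):
        u, v = project(p, f_px, cx, cy)
        total += math.hypot(u - u_obs, v - v_obs)
    return total / len(observations_px)


# Assumed intrinsics derived from the Table 7 caption values.
F_PX = 10.6 * 5472 / 13.133   # focal length in pixels, about 4417
CX, CY = 5472 / 2, 3648 / 2   # principal point assumed at the image centre

# A point on the optical axis observed 0.1 px to the right of the centre.
print(mean_reprojection_error([(0.0, 0.0, 100.0)], [(CX + 0.1, CY)], F_PX, CX, CY))
```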

Integration with GIS

This study demonstrates the integration of drone survey software with GIS by generating a contour map of the study area (Figure 8).

Figure 8: Contour map in ArcGIS 10.8 Desktop.

The DSM generated by Pix4D was exported into ArcGIS Desktop 10.8 to create a contour map, giving the user a better understanding of the surface of the area captured by the drone. Contour lines at a 10 m interval were generated from the DSM at a resolution of 100 cm.
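In ArcGIS the contouring step is done with a Contour tool; the sketch below only illustrates the underlying idea, not Esri's implementation. Contour elevations are the multiples of the interval (here 10 m, from a base of 0 m) that fall within the DSM's elevation range, and a contour at a given level passes through every grid cell whose corner elevations straddle that level, which is where a marching-squares algorithm would trace line segments. The function names and the elevation values are hypothetical.

```python
import math


def contour_levels(zmin, zmax, interval, base=0.0):
    """Contour elevations base + k * interval that fall within [zmin, zmax]."""
    k_start = math.ceil((zmin - base) / interval)
    k_end = math.floor((zmax - base) / interval)
    return [base + k * interval for k in range(k_start, k_end + 1)]


def cells_crossed(grid, level):
    """(row, col) of grid cells whose four corner elevations straddle `level`;
    these are the cells a marching-squares contour at that level passes through."""
    crossed = []
    for r in range(len(grid) - 1):
        for c in range(len(grid[0]) - 1):
            corners = (grid[r][c], grid[r][c + 1], grid[r + 1][c], grid[r + 1][c + 1])
            if min(corners) < level <= max(corners):
                crossed.append((r, c))
    return crossed


# Hypothetical DSM elevation range (m) and a 10 m contour interval.
print(contour_levels(1003.2, 1041.7, 10.0))
```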

In this study, a survey was performed to explore the capabilities of UAVs. The focus was on drone preparation, image capture and image processing using Pix4D, drone imagery end products and integration with GIS for further analysis. Also noted were applications of drone surveys ranging from dam and bridge inspections, land surveying and construction monitoring to slope monitoring. This study shows that digital photogrammetry permits the reconstruction of topography using algorithms that can provide 3D spatial information about phenomena. Further research may analyze how drones could help explore vacant land and safe zones in the study area.

The article is dedicated to Geomatics Engineers and Geospatial Analysts, with special thanks to the University of Zimbabwe for surveying instruments and permission to use photographs and data.

The author declares that there are no competing interests that could have influenced the work in this paper.

Citation: Chipatiso E (2023) Surveying and Geographic Information Systems (GIS): Exploring the Capabilities of Unmanned Aerial Vehicles (UAV)/Drones. J Geol Geophys. 12:1153.

Received: 26-Sep-2023, Manuscript No. JGG-23-27180; Editor assigned: 28-Sep-2023, Pre QC No. JGG-23-27180 (PQ); Reviewed: 12-Oct-2023, QC No. JGG-23-27180; Revised: 19-Oct-2023, Manuscript No. JGG-23-27180 (R); Published: 27-Oct-2023, DOI: 10.35248/2381-8719.24.13.1153

Copyright: © 2023 Chipatiso E. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.