Mini Review - (2025) Volume 14, Issue 1

Artificial Intelligence: The Opportune Positivism

Mauro D. Rios*

Abstract

AI is the most dynamically evolving technology in history. This context presents us, on the one hand, with a backdrop of decades of research, development, trial and error and, on the other hand, with an uncertain future in which human beings will, for the first time in our history, possibly end up competing with a community of non-human entities for control of the means of production and of civic and educational instruction.

AI challenges us as humanity, and our response must be framed in terms of taking action to guarantee rights and to guarantee our superiority over AI in something that is unique to human beings: consciousness. AI behaves like water between stones: it will always make its way, as will technology in general. Its advance is unstoppable. To ban it would be madness, and to regulate it as a technology would be a mistake, because technology as such is not regulated. Regulation should be oriented towards foreseeing the contexts and cases of use, and of course it is necessary to have rules that punish the pernicious use of AI.

Keywords

Artificial Intelligence; Technology; Education; Government; Policy; Governance; IT

Introduction

The holistic view that is achieved by involving all sectors of society (government, private sector, academia, social sector, others), i.e., all possible actors and stakeholders in the use and training of AI, gives us a false sense of security with regard to achieving unbiased development. Undoubtedly, AI will always have biases because humans and different groups of people have biases [1].

AI is undoubtedly synonymous with trillions of data points, and if that is so, we should ask ourselves where there are trillions of data points that we lack the human capacity to normalize, classify or search in a simple way. With its capability to understand natural language, we could train AI in government offices on their trillions of documents, and the AI would take care of everything [2-4].

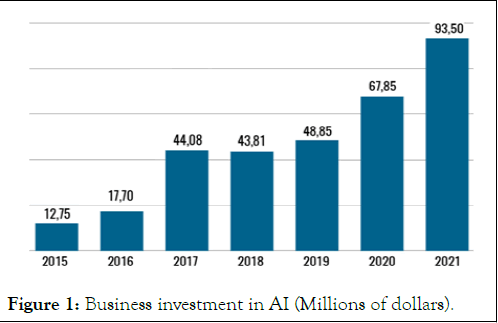

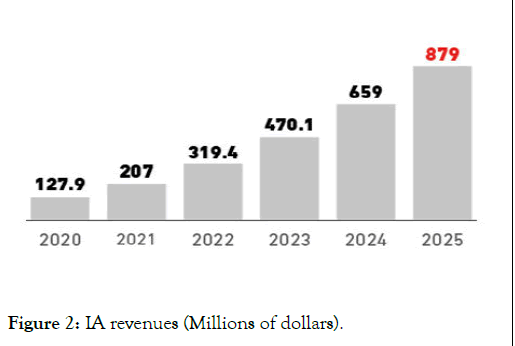

We are approaching a point where we will have to evaluate signing a new social contract, to redefine what are rights, duties and who can have them, but even more, we will be forced to redefine the concept of humanity, what we understand today as an individual, the definition of person, autonomous entity and some will even want to redefine the concept of being alive (Figures 1 and 2) [5,6].

Figure 1: Business investment in AI (Millions of dollars).

Figure 2: AI revenues (Millions of dollars).

Literature Review

Artificial intelligence arouses fears and worries, which is natural with every new technology and every new invention. What sets it apart from other technologies, however, is not its newness.

In Greek mythology there were stories of automatons created by Hephaestus, the god of technology; those automatons could move and speak like humans. In the 13th century, the Spanish philosopher Ramon Llull developed a system of mechanical logic based on symbols and diagrams. In the 17th century, the mathematician Gottfried Wilhelm Leibniz already spoke of a universal language of symbols to solve problems [7].

Between those ancient times and the modern era there were many antecedents of AI, but let us take the following as the starting point of modern AI:

Between 1910 and 1913, the mathematicians Bertrand Russell and Alfred North Whitehead published "Principia Mathematica", whose theory of types laid foundations for later type checking and type inference algorithms. In 1939, John Vincent Atanasoff and Clifford Berry created the Atanasoff-Berry computer. Ten years later, in 1949, Edmund Berkeley wrote "Giant Brains, or Machines That Think", which already mentioned that machines could handle large amounts of information at high speed [8-10].

And then we come to 1950, which is considered the year when AI took concrete root.

That year, Claude Shannon published "Programming a Computer for Playing Chess" and Alan Turing made fundamental contributions to the development of AI, such as the test that later became known as the Turing Test. Note: this test is considered obsolete today.

And of course, in 1955 John McCarthy introduced the term "artificial intelligence" for the first time. That same year, Allen Newell, Herbert Simon and Cliff Shaw created the first artificial intelligence software. Just three years later, in 1958, John McCarthy developed LISP, a programming language designed for artificial intelligence research.

Another concept whose origin we may lose sight of is machine learning, a term coined by Arthur Samuel in 1959.

The 1960s brought many advances, but one that deserves mention is the work of Terry Winograd, who in 1968 began developing SHRDLU, one of the first programs to understand natural language. This milestone is perhaps the starting point of what today is ChatGPT, Bard and others.

Fast forward to another milestone: in 1984, Roger Schank and Marvin Minsky warned of the second winter of AI.

AI winter is a term used to refer to periods of low activity and interest in artificial intelligence research and development. These periods are often marked by reduced investment, lowered expectations and a lack of significant advances in the technology. The two main AI winters are usually dated to 1974-1980 and 1987-1993.

The context described above gives us a backdrop of decades of research, development, trial and error. AI is not a weekend experiment: it has some of the most inquiring minds and most remarkable talents behind it.

The first thing we must do is trust in humanity. The second is to stop thinking that the problems are only technological. Technology will always solve its dilemmas: technical problems have a limited life, and we will solve them as both software and hardware evolve [11].

AI challenges us as humanity, and our response must be framed in terms of taking action to guarantee rights and to guarantee our superiority over AI in something that is uniquely human: self-awareness. AI is incapable of being aware of itself, its actions or its tasks.

It is important to remember that AI is the field of information science dedicated to giving software automation characteristics that simulate the cognitive abilities of the human being, applying these simulations to problem solving and manifesting the results as movement, written or spoken language, graphic representations or emerging data.

AI behaves like water between stones: it will always make its way, as will technology in general. Its advance is unstoppable. To prohibit it would be madness, and to regulate it as a technology would be a mistake, because technology itself should not be regulated. What we must regulate are the contexts, the application of AI and its development, and we must do so in order to protect freedoms and rights.

Today, no one would think of regulating the parts of a car engine, but the use of the car itself, and the context and scenario in which it operates, have been regulated.

It is necessary to regulate AI from positivism, not from the causality of the negative. Doing otherwise would be like regulating matches from the perspective of arsonists. Regulation should be oriented towards foreseeing the contexts and cases of use, and of course it is necessary to have rules that punish the pernicious use of AI.

Specialists have agreed to advise legislators on regulating through what we call anticipatory governance of AI. Anticipatory governance refers to the ability to anticipate and prepare for future changes and challenges. It seeks to generate a general regulatory framework that we can all agree on and that covers the scenarios emerging from foresight.

Anticipatory governance seeks to improve the quality of public management, reduce uncertainty, generate better policies and face strategic and existential threats, both local and global. It rests on an anticipation that allows us to have regulations prepared for scenarios that today are unknown or unpredictable. AI invites us to try out this governance model because of its incredible speed of evolution, which step by step conditions the evolutionary steps of humans [12].

To sketch the first outlines of an ethical, human-centred regulation of known use cases and those where laws will have to provide for what are today unimaginable scenarios, we often mention the multi-stakeholder strategy.

The holistic view that is achieved by involving all sectors of society (government, private sector, academia, social sector, others), i.e., all possible actors and stakeholders in the use and training of AI, gives us a false reassurance of achieving unbiased development. This can be a fallacy. Undoubtedly, AI will always have biases, because humans and different groups of people have biases, and human behaviour is and will be biased.

The sectors of society that we involve share a problem: the call is, in general, always answered by the same actors or individuals, those with the initiative and the militancy to take part. Each sector will therefore be represented by the same institutions and possibly by the same people. The participation of multiple actors will then carry a naturalized bias of its own, which we will transfer to AI training.

So biased are we that AI cannot reach unbiased conclusions when it sifts through the trillions of historical and current data it uses to construct its responses, and we must induce it, by modifying algorithms, to respond in an inclusive, non-racist, non-apocalyptic way.

In terms of employment, we need to understand that emerging technology does not necessarily generate job losses, but rather the need to retrain workers. This is because new and more specialized jobs and professions are emerging.

However, it is urgent to note that not all countries are ready to take on the irruption of AI, because the basis lies in education. Literacy rates and student dropout rates at secondary and university levels are key. Those who do not have at least basic initial and secondary education are probably not working today in a specialized or professional job, and they will make little use of technology. Unfortunately, these citizens will not have all the skills needed to retrain when AI begins to perform the same tasks they do.

Results and Discussion

Someone who made candles could become someone who made filaments for electric lamps, but someone who answers the phone in a call centre, with no basic or secondary education, will find it very difficult to retrain when AI takes control of call centres. Today, AI is being used to answer hotlines for people with depression, with very good results. It has been trained specifically for this task and has managed to replace a human being in empathising with those who present signs of depression.

In the past this task had been delegated exclusively to psychologists; today they are no longer needed.

AI is undoubtedly synonymous with trillions of data points, and if that is so, we should ask ourselves where there are trillions of data points that we lack the capacity to normalize, classify or search in an effortless way. The answer is easy: they are in public administration offices, in government offices where civil servants are drowning in tons of paper. Sorting through it and searching it productively and in a timely manner is a near-impossible venture.

This is where AI can change the negative reality into a positive one. With its capability to understand natural language, we could very well train AI in government offices on their trillions of documents, and the AI would take care of everything. By simply talking to the AI, we could ask it for all the documents that mention a topic, or for the set of documents related to each other, which is very useful for finding laws, for example. This is just a sample of AI's contribution to transparency and access to public information.
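The two requests described above (documents that mention a topic, and documents related to each other) can be illustrated with a minimal sketch. It is deliberately not an AI system: it uses plain keyword matching and word overlap as a stand-in for natural language understanding, and the sample archive, the document identifiers and the function names are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical sample archive; real government records would be far larger.
DOCUMENTS = {
    "law-001": "Law on access to public information and transparency",
    "decree-014": "Decree regulating access to public information requests",
    "memo-220": "Internal memo about office furniture purchases",
}

def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))

def build_index(documents):
    """Map each word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in tokenize(text):
            index[word].add(doc_id)
    return index

def mentioning(index, topic):
    """Return ids of documents that contain every word of the topic."""
    words = tokenize(topic)
    if not words:
        return set()
    return set.intersection(*(index.get(w, set()) for w in words))

def related(documents, doc_id, threshold=0.3):
    """Return ids whose word overlap (Jaccard) with doc_id meets the threshold."""
    base = tokenize(documents[doc_id])
    result = set()
    for other_id, text in documents.items():
        if other_id == doc_id:
            continue
        words = tokenize(text)
        union = base | words
        if union and len(base & words) / len(union) >= threshold:
            result.add(other_id)
    return result

INDEX = build_index(DOCUMENTS)
```

With this sample archive, `mentioning(INDEX, "public information")` returns the law and the decree, and `related(DOCUMENTS, "law-001")` returns only the decree. A system of the kind the text envisions would replace the word matching with a language model, but the two questions it answers would be the same.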

One of the greatest fears is the fact that AI can mimic with complete precision the production of text, images and natural language, including the ability to dialogue and speak fluently, so it is impossible to discern whether behind the curtain there is a person or an AI.

This is a description of reality for which there is no possibility of a medium-term solution. Those who seek certainty about whether behind the curtain is an AI or a person will not succeed. And unfortunately, there are no good predictions that in the future there will be a reliable way to replace the Turing test [13].

No tool developed so far can tell us with absolute certainty that something was produced by an AI; it can only raise the suspicion.

So we have started talking about watermarking. This technology is already being tested by some companies for their AI, but it clearly does not completely solve the problem. For example, if AI is used to produce several parts of a larger product, and then one person builds that larger product, the watermarks could either cease to be effective or disappear from the final product made up of multiple parts. The dilemma of whether the final product is attributable to the person or to the AI is then back in play.

And all this feeds further fears, so we come to another dilemma. The conception of trusted sources has broken down: AI has compromised trust and forces us to rethink the idea of security and certainty. This will force us to change our behaviour and to take doubt as a premise and suspicion as the initial conclusion of all things. The government, but also the technology giants, will have to provide new reliable sources of verification and validation. In this task, academia and scientific institutions will play a key role in providing methodology, processes, and procedures.

It is important to discuss the issue of transparency of algorithms. The demand that AI developers make their algorithms transparent is logical and reasonable, but unlikely to be met in full.

Today we have AIs developing AIs, and we have algorithms developing other algorithms. Thus, many AIs develop algorithms in real time to solve a problem, elaborate an answer or predict actions. So it is not possible to get such algorithms beforehand.

However, the starting point is always the human being, so the original algorithm was developed and trained by humans, and it is on that algorithm that we can apply regulation and attribute responsibility. But super artificial intelligence, or artificial general intelligence, is just around the corner. It is impossible to predict when we will reach it. But once we live with a super artificial intelligence, whose simulation of our cognitive capacities is almost perfect, the world will be absolutely different [14].

It will then be time to sign a new social contract, to redefine what are rights, duties and who can have them, but even more, we will be forced to redefine the concept of humanity, what we understand today as an individual, the definition of a person, an autonomous entity and some will even want to redefine the concept of being alive.

We may or may not agree to rethink these ideas and definitions, but surely many experts, scientists and ordinary people will be raising a discussion about it [15].

Conclusion

AI is undoubtedly the greatest industrial revolution humanity has ever seen, and is perhaps the most beneficial technology we will see for generations to come. The obscurantism that some collectives and corporations attempt to attribute to AI is unjustified. Like all technology, AI is neutral: it is its use, and therefore the human being involved in that use, that attributes malevolence or goodness to it.

We are frightened by the speed at which AI evolves. Perhaps for the first time, humanity is unable to catch up with a technology to contain it, regulate it, or understand it as quickly as it has done throughout its history with every other technological innovation and disruption, but this should not discourage us.

If humanity had the enormous talent needed to create AI, it will undoubtedly be those same talents that manage to contain, regulate and understand it.

Let us always keep a positive outlook on AI, because it deserves it.

References

- Khan SB, Namasudra S, Chandna S, Mashat A. Innovations in artificial intelligence and human-computer interaction in the digital era. Elsevier. 2023.

- Divino S. After all, artificial intelligence is not Intelligent: In a search for a comprehensible neuroscientific definition of intelligence. Opin Jurid. 2022;21(46):1-21.

- Consuegra LD, Vasquez PA, Perez AM. Artificial intelligence algorithms based on socio-behavioral profiles for intelligent customer segmentation: Case study. Eng Compet. 2023;25(3).

- Grau GI. Defective software and algorithms: some considerations on the responsibility of the developer of software or artificial intelligence systems. IDP. Int Law Pol Mag. 2023;4(38):1-2.

- Leibniz GW. Universal language, characteristics and logic. Marcial Pons. 2023. ISBN 9788490451083.

- Monaca M. Artificial intelligence and economics: The key to the future. Springer. 2023.

- McCarthy J, Lifschitz V. Formalizing commonsense: Papers by John McCarthy. Greenwood Publishing Group Inc. 1990.

- McCarthy J. Artificial intelligence, logic, and formalising common sense. Machine learning and the city: Applications in architecture and urban design. 2022:69-90.

- Santacruz DM, Osorio DF. Intellectual property law put to the test: Artificial intelligence with inventive capacity. Intang Prop Mag. 2023;35:147.

- Piercy J. Symbols: A universal language. Michael O'Mara books. 2013.

- Ríos MD. Artificial Intelligence: When technology is the smallest of the paradigms. Medwin Publishers. 2023.

- Mauro R. Chimera and ravings about artificial intelligence. Academia EDU. 2023.

- Navarro JR, Perez Y, Bravo DD. Incidences of artificial intelligence in contemporary education. Comm Sci Mag Comm Edu. 2023;31(77):97-107.

- Schmidpeter R, Altenburger R. Responsible artificial intelligence. Springer. 2023.

- Cruz DV, Cesar NP, Ruiz JL. Handbook of research on applied artificial intelligence and robotics for government processes. IGI Global. 2022.

Author Info

Mauro D. Rios*

Citation: Rios MD (2025) Artificial Intelligence: The Opportune Positivism. Global J Eng Des Technol. 14:242.

Received: 19-Sep-2023, Manuscript No. GJEDT-23-27281; Editor assigned: 21-Sep-2023, Pre QC No. GJEDT-23-27281 (PQ); Reviewed: 05-Oct-2023, QC No. GJEDT-23-27281; Revised: 18-Jan-2025, Manuscript No. GJEDT-23-27281 (R); Published: 25-Jan-2025, DOI: 10.35248/2319-7293.25.14.242

Copyright: © 2025 Rios MD. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.